Feature selection through feature importance

In the last exercise, you practiced how filter and wrapper methods can help you select features, both in Machine Learning and in Machine Learning interviews. In this exercise, you'll practice feature selection using the feature importance built into tree-based Machine Learning algorithms, applied to the diabetes DataFrame.
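As a rough illustration of what "built-in feature importance" means, the sketch below fits scikit-learn's `RandomForestClassifier` and `ExtraTreesClassifier` on a synthetic dataset from `make_classification`. The synthetic data is an assumption standing in for the diabetes DataFrame, which is not reproduced here. With `shuffle=False`, the first three columns are the informative ones and the remaining five are noise, so their impurity-based scores in `feature_importances_` should tend to be larger.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import ExtraTreesClassifier, RandomForestClassifier

# Synthetic stand-in for the diabetes data (an assumption): with shuffle=False,
# columns 0-2 are informative and columns 3-7 are pure noise.
X, y = make_classification(n_samples=1000, n_features=8, n_informative=3,
                           n_redundant=0, n_repeated=0, shuffle=False,
                           random_state=123)

importances = {}
for model in (RandomForestClassifier(n_estimators=100, random_state=123),
              ExtraTreesClassifier(n_estimators=100, random_state=123)):
    model.fit(X, y)
    # Impurity-based importances: one non-negative score per column, summing to 1
    importances[type(model).__name__] = model.feature_importances_

for name, scores in importances.items():
    ranking = np.argsort(scores)[::-1]  # column indices, most important first
    print(name, np.round(scores, 3), "ranking:", ranking)
```

Both ensembles expose the same `feature_importances_` attribute, so swapping one tree-based model for another does not change how the scores are read.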

DataCamp only has time and space for you to practice a few of these methods, but the scikit-learn website has excellent documentation covering several other ways to select features.
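One of the approaches in scikit-learn's feature selection documentation, `SelectFromModel`, turns tree-based importances into an actual selection rule: it fits the wrapped estimator and keeps only the columns whose importance is at or above a threshold. A minimal sketch, assuming synthetic data from `make_classification` rather than the diabetes DataFrame, with `threshold="mean"` chosen for illustration:

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.feature_selection import SelectFromModel

# Synthetic data (an assumption): columns 0-2 informative, 3-7 noise
X, y = make_classification(n_samples=1000, n_features=8, n_informative=3,
                           n_redundant=0, shuffle=False, random_state=123)

# Keep every column whose forest importance is >= the mean importance
selector = SelectFromModel(
    RandomForestClassifier(n_estimators=100, random_state=123),
    threshold="mean")
X_selected = selector.fit_transform(X, y)

mask = selector.get_support()  # boolean mask over the original columns
print("Importances:", selector.estimator_.feature_importances_.round(3))
print("Kept columns:", mask.nonzero()[0])
print("Shape before/after:", X.shape, X_selected.shape)
```

The threshold is a design choice: `"mean"` keeps roughly the above-average features, while a float or a string such as `"median"` gives tighter or looser cuts.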

The feature matrix and target vector are available in your workspace as X and y, respectively.

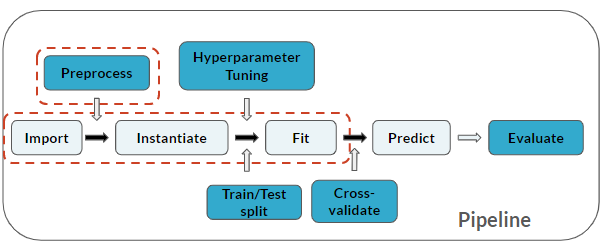

Recuerda que la selección de características se considera un paso de preprocesamiento:
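Treating selection as preprocessing has a practical consequence: the importances should be computed only on training data, otherwise information from the evaluation folds leaks into the choice of features. One way to arrange that, sketched here under the assumption of synthetic data and a `LogisticRegression` downstream model (neither comes from the exercise), is to put `SelectFromModel` inside a `Pipeline` and cross-validate the whole pipeline:

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.feature_selection import SelectFromModel
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import Pipeline

# Synthetic stand-in data (an assumption, not the diabetes DataFrame)
X, y = make_classification(n_samples=500, n_features=8, n_informative=3,
                           n_redundant=0, random_state=123)

pipe = Pipeline([
    # Step 1 (preprocessing): keep features whose forest importance >= the mean
    ("select", SelectFromModel(
        RandomForestClassifier(n_estimators=100, random_state=123),
        threshold="mean")),
    # Step 2: the downstream model only sees the selected columns
    ("model", LogisticRegression(max_iter=1000)),
])

# Each CV split refits the selector on its own training portion only
scores = cross_val_score(pipe, X, y, cv=5)
print("Mean CV accuracy:", round(scores.mean(), 3))

# Fitting on all the data shows which columns the selector keeps
pipe.fit(X, y)
print("Kept columns:", pipe.named_steps["select"].get_support(indices=True))
```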

This exercise is part of the course Practicing Machine Learning Interview Questions in Python.

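# Import the random forest classifier
from sklearn.ensemble import RandomForestClassifier

# Instantiate (X, y and diabetes are preloaded in the workspace)
rf_mod = RandomForestClassifier(max_depth=2, random_state=123,
                                n_estimators=100, oob_score=True)

# Fit on the feature matrix X and the target vector y
rf_mod.fit(X, y)

# Print the column names and each feature's importance
print(diabetes.columns)
print(rf_mod.feature_importances_)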
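A bare array from `feature_importances_` is hard to read next to a list of column names. The sketch below pairs the two in a `pandas.Series` and sorts it, using the same hyperparameters as the exercise but a synthetic DataFrame with hypothetical column names `feature_0` to `feature_7` in place of the diabetes data. Because `oob_score=True`, the fitted model also exposes an out-of-bag accuracy in `oob_score_`.

```python
import pandas as pd
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier

# Hypothetical stand-in for the workspace's X and y (not the diabetes data)
X_arr, y = make_classification(n_samples=1000, n_features=8, n_informative=3,
                               n_redundant=0, shuffle=False, random_state=123)
X = pd.DataFrame(X_arr, columns=[f"feature_{i}" for i in range(X_arr.shape[1])])

# Same hyperparameters as the exercise
rf_mod = RandomForestClassifier(max_depth=2, random_state=123,
                                n_estimators=100, oob_score=True)
rf_mod.fit(X, y)

# Label each importance with its column name, most important first
ranked = pd.Series(rf_mod.feature_importances_, index=X.columns)
ranked = ranked.sort_values(ascending=False)
print(ranked.round(3))

# Out-of-bag accuracy, available because oob_score=True
print("OOB score:", round(rf_mod.oob_score_, 3))
```

Indexing by `X.columns` rather than a separately typed list keeps names and scores aligned even if columns are reordered or dropped.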