Feature selection through feature importance

In the previous exercise, you practiced how filter and wrapper methods can help with feature selection, both in practice and in an interview. In this exercise, you'll practice feature selection using the built-in feature importance of tree-based machine learning algorithms on the diabetes DataFrame.

Although DataCamp only has time and space for a few methods, the scikit-learn website has excellent documentation that explains further approaches to feature selection.

The feature matrix and the target array are stored in your workspace as X and y, respectively.

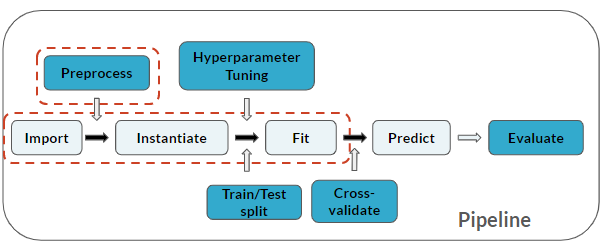

Remember: feature selection is considered a preprocessing step.
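To make the "preprocessing step" idea concrete, here is a minimal sketch in which feature selection runs before the final model inside a scikit-learn `Pipeline`. It is an illustration only, not part of the exercise: the data comes from scikit-learn's bundled `load_diabetes` set as a stand-in for the workspace `X` and `y`, and the names `X_demo`, `y_demo`, `pipe`, and `kept`, the `"median"` threshold, and the `LinearRegression` final model are all assumptions chosen for the example.

```python
from sklearn.datasets import load_diabetes
from sklearn.ensemble import RandomForestRegressor
from sklearn.feature_selection import SelectFromModel
from sklearn.linear_model import LinearRegression
from sklearn.pipeline import Pipeline

# Stand-in data (assumption): scikit-learn's bundled diabetes regression set.
X_demo, y_demo = load_diabetes(return_X_y=True, as_frame=True)

# Step 1 keeps the features whose forest importance is at least the median;
# step 2 fits the final model on the reduced feature matrix only.
pipe = Pipeline([
    ("select", SelectFromModel(
        RandomForestRegressor(n_estimators=100, random_state=123),
        threshold="median")),
    ("model", LinearRegression()),
])
pipe.fit(X_demo, y_demo)

# Boolean mask of the columns that survived the selection step.
kept = X_demo.columns[pipe.named_steps["select"].get_support()]
print(list(kept))
```

Because the selector lives inside the pipeline, it is refit on whatever data the pipeline is fit on, which keeps the selection from seeing held-out rows during cross-validation.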

This exercise is part of the course

Practicing Machine Learning Interview Questions in Python

Interactive exercise

Complete the sample code to successfully finish this exercise.

# Import
from sklearn.ensemble import ____

# Instantiate
rf_mod = ____(max_depth=2, random_state=123,
              n_estimators=100, oob_score=True)

# Fit
rf_mod.____(____, ____)

# Print
print(diabetes.columns)
print(rf_mod.____)
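For comparison, here is a self-contained sketch of the same import, instantiate, fit, and print pattern that you can run outside the workspace. It is not the official solution: it assumes a regression target and therefore uses `RandomForestRegressor`, and it substitutes scikit-learn's bundled `load_diabetes` data for the course's diabetes DataFrame, which may have different columns. The names `X_demo`, `y_demo`, `forest`, and `importances` are made up for the example.

```python
import pandas as pd
from sklearn.datasets import load_diabetes
from sklearn.ensemble import RandomForestRegressor

# Stand-in data (assumption): not necessarily the course's diabetes DataFrame.
X_demo, y_demo = load_diabetes(return_X_y=True, as_frame=True)

# Same hyperparameters as the scaffold; the estimator class is an assumption.
forest = RandomForestRegressor(max_depth=2, random_state=123,
                               n_estimators=100, oob_score=True)
forest.fit(X_demo, y_demo)

# Impurity-based importances, one value per column, normalized to sum to 1.
importances = pd.Series(forest.feature_importances_, index=X_demo.columns)
print(importances.sort_values(ascending=False))

# Out-of-bag R^2, available because oob_score=True was set.
print(f"OOB R^2: {forest.oob_score_:.3f}")
```

Pairing the importances with the column names in a `Series` avoids having to line up two separate printouts by eye.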