Feature selection through feature importance

In the last exercise, you saw how filter and wrapper methods can help select features in Machine Learning, including in technical interviews. In this exercise, you'll practice feature selection using the feature importance built into tree-based Machine Learning algorithms, on the diabetes DataFrame.

Although we only have time to practice a few of them on DataCamp, excellent documentation on the scikit-learn website covers several other ways to select features.

The feature matrix and target vector are available in your workspace as X and y.

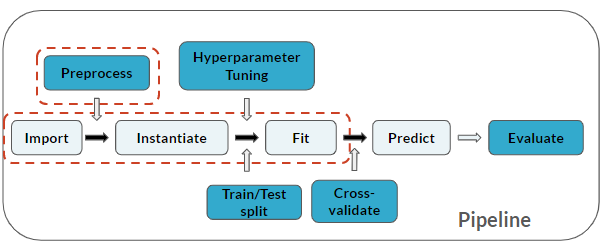

Recall that feature selection is a preprocessing step.
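To make the "preprocessing step" idea concrete, here is a minimal sketch that places importance-based selection ahead of a model inside a scikit-learn `Pipeline`. It is an illustration, not the course's solution: the names `X_demo`, `y_demo`, and `pipe` are hypothetical, scikit-learn's bundled diabetes data stands in for the workspace `X` and `y`, and the `"median"` threshold and the downstream `LinearRegression` are arbitrary choices.

```python
from sklearn.datasets import load_diabetes
from sklearn.ensemble import RandomForestRegressor
from sklearn.feature_selection import SelectFromModel
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import train_test_split
from sklearn.pipeline import Pipeline

# Assumption: scikit-learn's bundled diabetes data stands in for the course's X and y.
X_demo, y_demo = load_diabetes(return_X_y=True, as_frame=True)
X_train, X_test, y_train, y_test = train_test_split(
    X_demo, y_demo, random_state=123
)

pipe = Pipeline([
    # Step 1 (preprocessing): keep features whose random-forest importance
    # is at least the median importance.
    ("select", SelectFromModel(
        RandomForestRegressor(n_estimators=100, random_state=123),
        threshold="median",
    )),
    # Step 2 (modeling): fit a model on the selected features only.
    ("model", LinearRegression()),
])
pipe.fit(X_train, y_train)

# Names of the columns that survived the selection step.
kept = X_demo.columns[pipe.named_steps["select"].get_support()]
print(list(kept))
print(pipe.score(X_test, y_test))  # R^2 on the held-out split
```

Because the selector lives inside the pipeline, it is refit on the training data only, so the held-out split does not influence which features are kept.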

This exercise is part of the course

Practicing Machine Learning Interview Questions in Python

Hands-on interactive exercise

Try this exercise by completing this sample code.

# Import
from sklearn.ensemble import ____

# Instantiate
rf_mod = ____(max_depth=2, random_state=123,
              n_estimators=100, oob_score=True)

# Fit
rf_mod.____(____, ____)

# Print
print(diabetes.columns)
print(rf_mod.____)
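For reference, one possible way to carry out these steps end to end is sketched below. It rests on assumptions: the target is treated as continuous, so `RandomForestRegressor` is used (a classification target would call for `RandomForestClassifier` instead), and scikit-learn's bundled diabetes data replaces the course's `diabetes` DataFrame, which may differ. The names `X_demo`, `y_demo`, `rf_demo`, and `importances` are hypothetical.

```python
import pandas as pd
from sklearn.datasets import load_diabetes
from sklearn.ensemble import RandomForestRegressor

# Assumption: scikit-learn's bundled diabetes data stands in for the course's X and y.
X_demo, y_demo = load_diabetes(return_X_y=True, as_frame=True)

# Same hyperparameters as the exercise scaffold.
rf_demo = RandomForestRegressor(max_depth=2, random_state=123,
                                n_estimators=100, oob_score=True)
rf_demo.fit(X_demo, y_demo)

# Pair each impurity-based importance with its column name and rank them.
importances = pd.Series(
    rf_demo.feature_importances_, index=X_demo.columns
).sort_values(ascending=False)
print(importances)

# Out-of-bag R^2, available because oob_score=True.
print(rf_demo.oob_score_)
```

Features near the top of the ranking are the natural candidates to keep. The importances are normalized to sum to 1, so each value reads as a share of the forest's total impurity reduction.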