Implementing the SARSA update rule

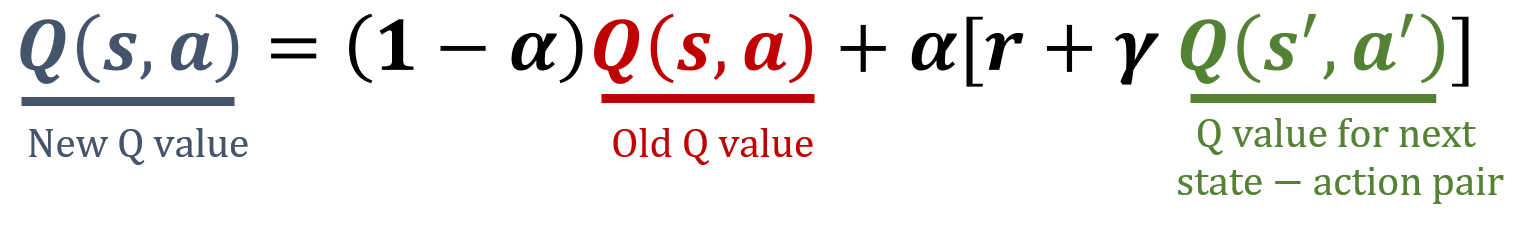

SARSA is an on-policy reinforcement learning (RL) algorithm that updates the action-value function using both the action actually taken and the action selected in the next state. Because the update uses the value of the next state-action pair as well as the current one, the learned policy accounts for the actions the agent will actually take. The SARSA update rule is given in the accompanying image; your task is to implement a function that updates a Q-table according to that rule.
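For reference, the SARSA update is commonly written as follows, where $\alpha$ is the learning rate, $\gamma$ is the discount factor, $(s, a)$ is the current state-action pair, $r$ is the reward received, and $(s', a')$ is the next state-action pair. This is the standard textbook form, stated here as an assumption; the course image may arrange the same terms differently.

$$
Q(s, a) \leftarrow (1 - \alpha)\, Q(s, a) + \alpha \left[ r + \gamma\, Q(s', a') \right]
$$

Expanding the first term gives the equivalent temporal-difference form:

$$
Q(s, a) \leftarrow Q(s, a) + \alpha \left[ r + \gamma\, Q(s', a') - Q(s, a) \right]
$$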

The NumPy library has been imported as `np`.

This exercise is part of the course

Reinforcement Learning with Gymnasium in Python

Instructions

- Retrieve the current Q-value for the given state-action pair.

- Find the Q-value for the next state-action pair.

- Update the Q-value of the current state-action pair using the SARSA formula.

- Update the Q-table `Q`, assuming an agent takes action `0` in state `0`, receives a reward of `5`, moves to state `1`, and takes action `1`.

Hands-on interactive exercise

Try this exercise by completing this sample code.

def update_q_table(state, action, reward, next_state, next_action):
    # Get the old value of the current state-action pair
    old_value = ____
    # Get the value of the next state-action pair
    next_value = ____
    # Compute the new value of the current state-action pair
    Q[(state, action)] = ____

alpha = 0.1
gamma = 0.8
Q = np.array([[10,0],[0,20]], dtype='float32')

# Update the Q-table for the (state 0, action 0) pair
____

print(Q)
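One possible way to fill in the blanks is sketched below. It assumes the standard SARSA form (new value = (1 - alpha) * old value + alpha * (reward + gamma * next value)) and, like the template, reads `Q`, `alpha`, and `gamma` as module-level variables. Treat it as an illustration rather than the course's reference solution.

```python
import numpy as np

def update_q_table(state, action, reward, next_state, next_action):
    # Old value of the current state-action pair
    old_value = Q[state, action]
    # Value of the next state-action pair (the action actually selected next)
    next_value = Q[next_state, next_action]
    # SARSA update (assumed standard form): blend the old estimate with the
    # bootstrapped target reward + gamma * Q(s', a')
    Q[state, action] = (1 - alpha) * old_value + alpha * (reward + gamma * next_value)

alpha = 0.1   # learning rate
gamma = 0.8   # discount factor
Q = np.array([[10, 0], [0, 20]], dtype='float32')

# Agent takes action 0 in state 0, receives reward 5,
# moves to state 1, and takes action 1
update_q_table(0, 0, 5, 1, 1)

print(Q)
```

With these values the target is 5 + 0.8 * 20 = 21, so the updated entry is 0.9 * 10 + 0.1 * 21 = 11.1 (up to float32 rounding). The other three entries of `Q` are unchanged.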