Appliquer Expected SARSA

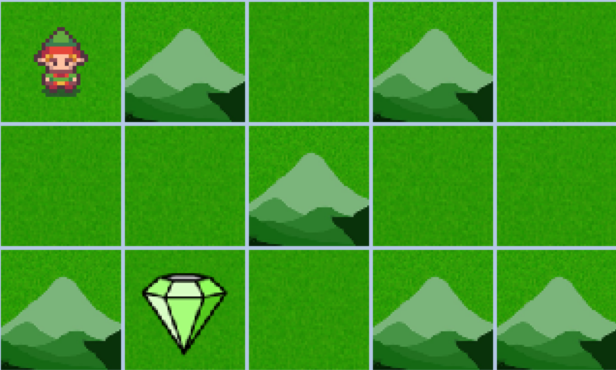

Vous allez maintenant appliquer l’algorithme Expected SARSA dans un environnement personnalisé comme ci-dessous, où l’objectif est de faire naviguer un agent sur une grille pour atteindre la cible le plus rapidement possible. Les mêmes règles qu’auparavant s’appliquent : l’agent reçoit une récompense de +10 en atteignant le diamant, -2 en passant par une montagne, et -1 pour tout autre état.

L’environnement a été importé sous le nom env.

Cet exercice fait partie du cours

Reinforcement Learning avec Gymnasium en Python

Instructions

- Initialisez la table Q

Qavec des zéros pour chaque paire état–action. - Mettez à jour la table Q à l’aide de la fonction

update_q_table(). - Extrayez la policy sous forme de dictionnaire à partir de la table Q apprise.

Exercice interactif pratique

Essayez cet exercice en complétant cet exemple de code.

# Initialize the Q-table with random values

Q = ____

for i_episode in range(num_episodes):

state, info = env.reset()

done = False

while not done:

action = env.action_space.sample()

next_state, reward, done, truncated, info = env.step(action)

# Update the Q-table

____

state = next_state

# Derive policy from Q-table

policy = {state: ____ for state in range(____)}

render_policy(policy)