Generación de leyendas de tuberías

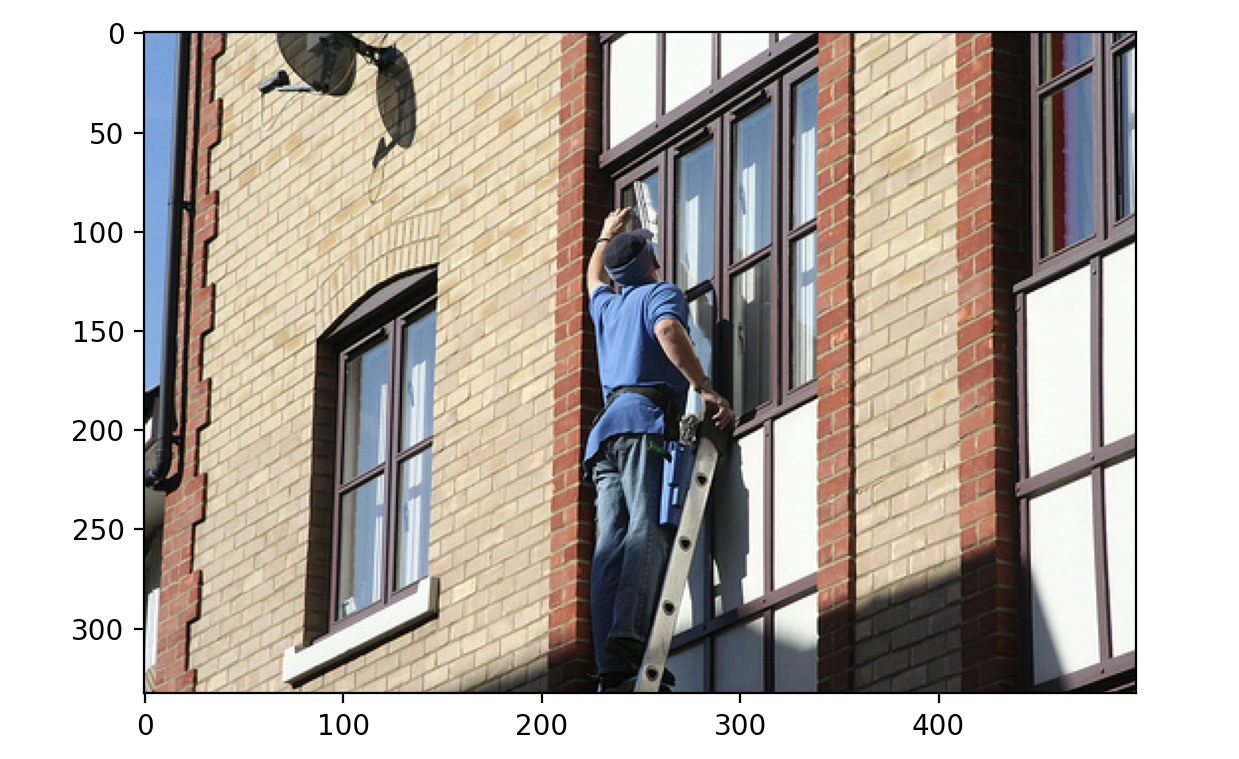

En este ejercicio, volverás a utilizar el conjunto de datos flickr, que contiene 30 000 imágenes y sus correspondientes pies de foto. Ahora generarás un pie de foto para la siguiente imagen utilizando un pipeline en lugar de las clases automáticas.

El conjunto de datos (dataset) se ha cargado con la siguiente estructura:

Dataset({

features: ['image', 'caption', 'sentids', 'split', 'img_id', 'filename'],

num_rows: 10

})

Se ha cargado el módulo pipeline (pipeline).

Este ejercicio forma parte del curso

Modelos multimodales con Hugging Face

Instrucciones del ejercicio

- Carga el pipeline

image-to-textcon el modelo preentrenadoSalesforce/blip-image-captioning-base. - Utiliza la tubería para generar un pie de foto para la imagen en el índice

3.

Ejercicio interactivo práctico

Prueba este ejercicio y completa el código de muestra.

# Load the image-to-text pipeline

pipe = pipeline(task="____", model="____")

# Use the pipeline to generate a caption with the image of datapoint 3

pred = ____(dataset[3]["____"])

print(pred)