Optimizing with gradients

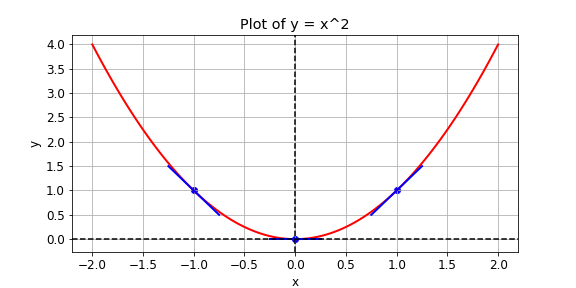

You are given a loss function \(y = x^{2}\) that you want to minimize. You can do this by computing the slope with GradientTape() at different values of x. If the slope is positive, you can decrease the loss by lowering x. If it is negative, you can decrease the loss by increasing x. This is how gradient descent works.
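
The update rule described above can be sketched in plain Python. This is a minimal illustration, not the exercise solution: the analytic derivative 2x stands in for the slope that GradientTape() would compute, and the names `slope`, `gradient_descent`, `learning_rate`, and `steps` are assumptions introduced here.

```python
def loss(x):
    """Loss function from the exercise: y = x^2."""
    return x * x


def slope(x):
    """Analytic derivative of x^2 (assumed stand-in for the tape-computed gradient)."""
    return 2.0 * x


def gradient_descent(x0, learning_rate=0.1, steps=50):
    """Move x against the sign of the slope, one small step at a time."""
    x = x0
    for _ in range(steps):
        # Positive slope lowers x, negative slope raises x.
        x = x - learning_rate * slope(x)
    return x


x_final = gradient_descent(-1.0)
print(x_final, loss(x_final))
```

Starting from either -1.0 or 1.0, x moves toward 0, where the loss is smallest. The step size of 0.1 is an arbitrary choice for this sketch.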

In practice, you would use a high-level tensorflow operation to perform gradient descent automatically. In this exercise, however, you will compute the slope at x values of -1, 1, and 0. The following operations are available: GradientTape(), multiply(), and Variable().
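
For reference, the slopes at these three points can be worked out by hand with the power rule, which gives values to compare the computed gradients against:

\[
\frac{dy}{dx} = 2x, \qquad
\left.\frac{dy}{dx}\right|_{x=-1} = -2, \qquad
\left.\frac{dy}{dx}\right|_{x=1} = 2, \qquad
\left.\frac{dy}{dx}\right|_{x=0} = 0
\]

The slope is negative at x = -1 (increase x to reduce the loss), positive at x = 1 (decrease x), and zero at x = 0, which is the minimum.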

This exercise is part of the course

Introduction to TensorFlow in Python

Exercise instructions

- Define `x` as a variable with the initial value `x0`.
- Set the loss function `y` equal to `x` multiplied by `x`. Do not use operator overloading.
- Set the function to return the gradient of `y` with respect to `x`.

Interactive exercise

Complete the sample code to finish this exercise.

# The exercise states these operations are available; imported here so the file stands alone
from tensorflow import GradientTape, Variable, multiply

def compute_gradient(x0):
    # Define x as a variable with an initial value of x0
    x = ____(x0)
    with GradientTape() as tape:
        tape.watch(x)
        # Define y using the multiply operation
        y = ____
    # Return the gradient of y with respect to x
    return tape.gradient(____, ____).numpy()

# Compute and print gradients at x = -1, 1, and 0
print(compute_gradient(-1.0))
print(compute_gradient(1.0))
print(compute_gradient(0.0))
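
One way to sanity-check the three printed values without TensorFlow is a central finite difference. The sketch below is an independent check, not part of the exercise: `numeric_gradient` and the step size `h` are hypothetical names chosen for this illustration.

```python
def loss(x):
    """Loss function from the exercise: y = x^2."""
    return x * x


def numeric_gradient(f, x, h=1e-6):
    """Approximate df/dx at x with a central difference of width 2h."""
    return (f(x + h) - f(x - h)) / (2.0 * h)


# Approximate the slope at the same points the exercise evaluates
for x0 in (-1.0, 1.0, 0.0):
    print(x0, numeric_gradient(loss, x0))
```

Each approximation should land very close to 2 times the input, so a completed compute_gradient() that disagrees noticeably with these numbers likely has the arguments to tape.gradient() in the wrong order or is missing the multiply() step.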