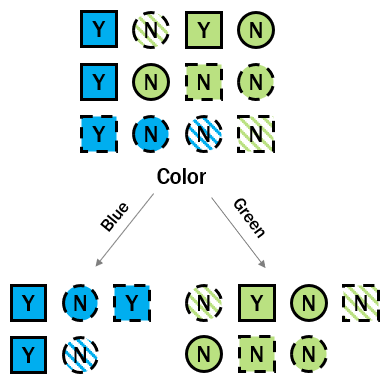

Calculating the information gain of color

Now that you know the entropies of the root node and the child nodes, you can calculate the information gain that splitting on color provides.

In the previous exercises, you calculated entropy_root, entropy_left, and entropy_right. They are available in the console.

Remember: you take the weighted average of the child nodes' entropies. To do this, you need to calculate what fraction of the original observations ended up on the left side of the split and what fraction ended up on the right side. Store these fractions in p_left and p_right, respectively.
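Written out as formulas, the weights and the resulting gain look as follows. Here $H$ stands for entropy_root, entropy_left, and entropy_right, and $n_{\text{left}}$, $n_{\text{right}}$, and $n$ are the observation counts on each side and in total; this notation is introduced only for illustration. Going by the denominator in the exercise code, $n = 12$ here.

$$
p_{\text{left}} = \frac{n_{\text{left}}}{n}, \qquad
p_{\text{right}} = \frac{n_{\text{right}}}{n} = 1 - p_{\text{left}}
$$

$$
\text{IG} = H_{\text{root}} - \left( p_{\text{left}} \, H_{\text{left}} + p_{\text{right}} \, H_{\text{right}} \right)
$$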

This exercise is part of the course

Dimensionality Reduction in R

Exercise instructions

- Calculate the split weights, i.e., the fraction of observations on each side of the split.

- Calculate the information gain using the weights and the entropies.


Complete the sample code to finish this exercise.

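```r
# Calculate the split weights
# (fill in the number of observations on each side; 12 observations in total)
p_left <- ___/12
p_right <- ___/12

# Calculate the information gain:
# root entropy minus the weighted average of the child entropies
info_gain <- entropy_root -
  (p_left * entropy_left +
   p_right * entropy_right)

info_gain
```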
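To show where values like entropy_root, entropy_left, and entropy_right come from, here is a minimal, self-contained sketch in base R. The helper functions entropy() and info_gain_split() and the toy label and color vectors are hypothetical, made up for illustration. Entropy is assumed to be Shannon entropy in bits (log base 2), which may not match the base used in the course.

```r
# Hypothetical helper: Shannon entropy (in bits) of a vector of class labels
entropy <- function(labels) {
  p <- table(labels) / length(labels)  # class proportions
  p <- p[p > 0]                        # drop empty classes to avoid 0 * log2(0)
  -sum(p * log2(p))
}

# Hypothetical helper: information gain of a binary split.
# `goes_left` is a logical vector marking observations sent to the left node.
info_gain_split <- function(labels, goes_left) {
  p_left  <- mean(goes_left)  # share of observations on the left
  p_right <- 1 - p_left       # share of observations on the right
  entropy(labels) -
    (p_left  * entropy(labels[goes_left]) +
     p_right * entropy(labels[!goes_left]))
}

# Toy data (made up): 12 observations, split on color
label <- c("yes", "yes", "yes", "yes", "yes", "no",
           "no",  "no",  "no",  "no",  "no",  "yes")
color <- c(rep("red", 6), rep("blue", 6))

info_gain_split(label, color == "red")
```

Because the split is binary, every observation lands on exactly one side, so p_right is simply 1 - p_left.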