The dangers of local minima

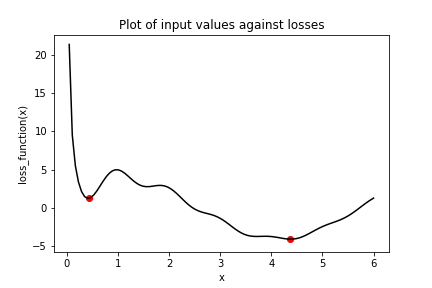

Consider the plot of the following loss function, loss_function(), which has a global minimum, marked by the dot on the right, and several local minima, including the one marked by the dot on the left.

In this exercise, you will try to find the global minimum of loss_function() using keras.optimizers.SGD(). You will do this twice, each time with a different initial value of the input to loss_function(). First, you will use x_1, a variable with an initial value of 6.0. Second, you will use x_2, a variable with an initial value of 0.3. Note that loss_function() has already been defined and is available.
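To make the effect of the starting value concrete, here is a minimal pure-Python sketch of gradient descent run from the same two initial values. The names toy_loss, toy_loss_grad, and gradient_descent are hypothetical, and toy_loss is an invented stand-in, not the course's loss_function(). The step count of 1000 is also an assumption, chosen so that this toy example converges.

```python
# Hypothetical stand-in for the course's loss_function(): a tilted double well
# with a local minimum near x = 1.07 and the global minimum near x = 5.06.
def toy_loss(x):
    return 0.1 * (x - 1.0) ** 2 * (x - 5.0) ** 2 - 0.2 * x


def toy_loss_grad(x):
    # Analytic derivative of toy_loss.
    return 0.4 * (x - 1.0) * (x - 3.0) * (x - 5.0) - 0.2


def gradient_descent(x0, learning_rate=0.01, steps=1000):
    # Repeatedly step against the gradient, starting from x0.
    x = x0
    for _ in range(steps):
        x -= learning_rate * toy_loss_grad(x)
    return x


x_from_6 = gradient_descent(6.0)   # settles in the right-hand (global) minimum
x_from_03 = gradient_descent(0.3)  # settles in the left-hand (local) minimum
print(x_from_6, toy_loss(x_from_6))
print(x_from_03, toy_loss(x_from_03))
```

In this toy setup the two runs end at different points, and only the run started at 6.0 reaches the lower loss. The optimizer itself is identical in both runs; only the initial value changes.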

This exercise is part of the course

Introduction to TensorFlow in Python

Instructions

- Define opt to use the SGD (stochastic gradient descent) optimizer with a learning rate of 0.01.
- Perform minimization using the loss function loss_function() and the variable with an initial value of 6.0, x_1.
- Perform minimization using the loss function loss_function() and the variable with an initial value of 0.3, x_2.
- Print x_1 and x_2 as numpy arrays and check whether the values differ. These are the minima identified by the algorithm.
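As a rough guide to what each minimization step in these instructions does: for a deterministic loss of a single variable, and assuming the optimizer is left at its default momentum of zero, SGD reduces to the plain gradient descent update below. The symbols $\eta$ (the learning rate) and $x_t$ (the variable's value after $t$ steps) are notation introduced here for illustration.

$$
x_{t+1} = x_t - \eta \, \frac{d}{dx}\,\mathrm{loss\_function}(x_t), \qquad \eta = 0.01
$$

Each step follows only the local slope, so the initial value determines which minimum the iterates approach.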

Hands-on interactive exercise

Try this exercise by completing this sample code.

# Initialize x_1 and x_2
x_1 = Variable(6.0, dtype=float32)
x_2 = Variable(0.3, dtype=float32)

# Define the optimization operation
opt = keras.optimizers.____(learning_rate=____)

for j in range(100):
    # Perform minimization using the loss function and x_1
    opt.minimize(lambda: loss_function(____), var_list=[____])
    # Perform minimization using the loss function and x_2
    opt.minimize(lambda: ____, var_list=[____])

# Print x_1 and x_2 as numpy arrays
print(____.numpy(), ____.numpy())