Éviter les minima locaux

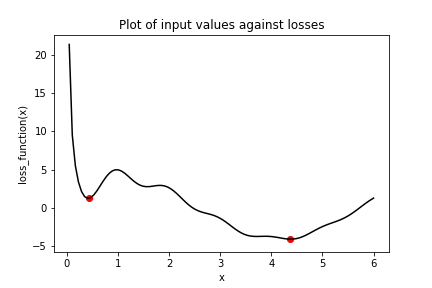

L’exercice précédent a montré à quel point il est facile de rester coincé dans des minima locaux. Nous avions un problème d’optimisation simple à une variable, et la descente de gradient n’a tout de même pas trouvé le minimum global lorsqu’il fallait d’abord traverser des minima locaux. Une façon d’éviter ce problème est d’utiliser le momentum, qui permet à l’optimiseur de franchir les minima locaux. Nous allons à nouveau utiliser la fonction de perte du précédent exercice, qui a été définie et est disponible sous le nom loss_function().

Plusieurs optimiseurs dans tensorflow disposent d’un paramètre de momentum, notamment SGD et RMSprop. Vous utiliserez RMSprop dans cet exercice. Notez que x_1 et x_2 ont été initialisés cette fois à la même valeur. Par ailleurs, keras.optimizers.RMSprop() a également été importé pour vous depuis tensorflow.

Cet exercice fait partie du cours

Introduction à TensorFlow en Python

Instructions

- Configurez l’opération

opt_1avec un taux d’apprentissage (learning_rate) de 0,01 et un momentum de 0,99. - Définissez

opt_2pour utiliser l’optimiseur RMSprop (root mean square propagation) avec un taux d’apprentissage de 0,01 et un momentum de 0,00. - Définissez l’opération de minimisation pour

opt_2. - Affichez

x_1etx_2en tableauxnumpy.

Exercice interactif pratique

Essayez cet exercice en complétant cet exemple de code.

# Initialize x_1 and x_2

x_1 = Variable(0.05,float32)

x_2 = Variable(0.05,float32)

# Define the optimization operation for opt_1 and opt_2

opt_1 = keras.optimizers.RMSprop(learning_rate=____, momentum=____)

opt_2 = ____

for j in range(100):

opt_1.minimize(lambda: loss_function(x_1), var_list=[x_1])

# Define the minimization operation for opt_2

____

# Print x_1 and x_2 as numpy arrays

print(____, ____)