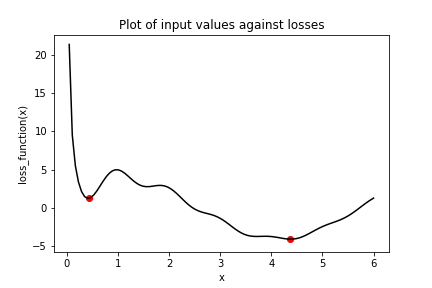

Avoiding local minima

The previous problem showed how easy it is to get stuck in local minima. We had a simple optimization problem in one variable and gradient descent still failed to deliver the global minimum when we had to travel through local minima first. One way to avoid this problem is to use momentum, which allows the optimizer to break through local minima. We will again use the loss function from the previous problem, which has been defined and is available for you as loss_function().

Several optimizers in tensorflow have a momentum parameter, including SGD and RMSprop. You will make use of RMSprop in this exercise. Note that x_1 and x_2 have been initialized to the same value this time. Furthermore, keras.optimizers.RMSprop() has also been imported for you from tensorflow.

This exercise is part of the course

Introduction to TensorFlow in Python

Exercise instructions

- Set the

opt_1operation to use a learning rate of 0.01 and a momentum of 0.99. - Set

opt_2to use the root mean square propagation (RMS) optimizer with a learning rate of 0.01 and a momentum of 0.00. - Define the minimization operation for

opt_2. - Print

x_1andx_2asnumpyarrays.

Hands-on interactive exercise

Have a go at this exercise by completing this sample code.

# Initialize x_1 and x_2

x_1 = Variable(0.05,float32)

x_2 = Variable(0.05,float32)

# Define the optimization operation for opt_1 and opt_2

opt_1 = keras.optimizers.RMSprop(learning_rate=____, momentum=____)

opt_2 = ____

for j in range(100):

opt_1.minimize(lambda: loss_function(x_1), var_list=[x_1])

# Define the minimization operation for opt_2

____

# Print x_1 and x_2 as numpy arrays

print(____, ____)