The dangers of local minima

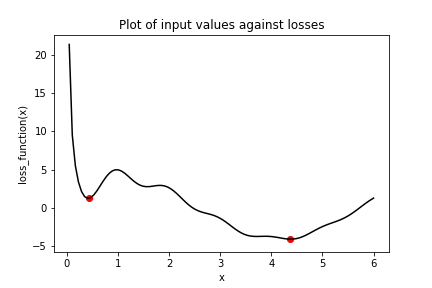

Consider the plot above of the loss function loss_function(), which has a global minimum, marked by the dot on the right, and several local minima, including the one marked by the dot on the left.
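This sketch makes the distinction between local and global minima concrete. It scans a one-dimensional loss on a fine grid and reports every interior grid point that is lower than both of its neighbours. The lowest of those points approximates the global minimum.

The course's loss_function() is not reproduced here. hypothetical_loss() is an assumed stand-in: a tilted double well whose right-hand minimum is the global one. grid_local_minima() is likewise a hypothetical helper written for illustration.

```python
def hypothetical_loss(x: float) -> float:
    # Assumed stand-in for loss_function(): a tilted double well whose
    # right-hand minimum (global) is lower than the left-hand one (local)
    return (x**2 - 1.0) ** 2 - 0.3 * x


def grid_local_minima(f, lo: float, hi: float, n: int):
    """Hypothetical helper: interior grid points lower than both neighbours."""
    step = (hi - lo) / (n - 1)
    xs = [lo + i * step for i in range(n)]
    ys = [f(x) for x in xs]
    return [
        xs[i]
        for i in range(1, n - 1)
        if ys[i] < ys[i - 1] and ys[i] < ys[i + 1]
    ]


# Scan [-2, 2] with a spacing of 0.001
minima = grid_local_minima(hypothetical_loss, -2.0, 2.0, 4001)
# The global minimum is the local minimum with the lowest loss
global_min = min(minima, key=hypothetical_loss)
print("local minima:", minima)
print("global minimum:", global_min)
```

A grid scan like this is only practical for one or two inputs. It is shown here to make the loss landscape visible, not as an alternative to the optimizer.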

In this exercise, you will try to find the global minimum of loss_function() using keras.optimizers.SGD(). You will do this twice, each time with a different initial value of the input to loss_function(): first with x_1, a variable initialized to 6.0, and then with x_2, a variable initialized to 0.3. loss_function() has already been defined and is available in the environment.
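The effect of the starting value can be reproduced without TensorFlow. This sketch applies plain gradient descent for 100 steps with a learning rate of 0.01, starting from two different points. Plain gradient descent is the update SGD performs when momentum is 0 and the loss involves no sampling noise.

The sketch rests on a few assumptions:
- It uses the same hypothetical tilted double well as a stand-in for loss_function().
- Its derivative, hypothetical_grad(), is written out by hand.
- The starting values 2.0 and -2.0 are illustrative, not the exercise's 6.0 and 0.3.

```python
def hypothetical_loss(x: float) -> float:
    # Assumed stand-in for loss_function() (tilted double well)
    return (x**2 - 1.0) ** 2 - 0.3 * x


def hypothetical_grad(x: float) -> float:
    # Derivative of hypothetical_loss, written out by hand
    return 4.0 * x * (x**2 - 1.0) - 0.3


def gradient_descent(x0: float, learning_rate: float = 0.01, steps: int = 100) -> float:
    # Plain gradient descent: x <- x - learning_rate * f'(x) on every step
    x = x0
    for _ in range(steps):
        x -= learning_rate * hypothetical_grad(x)
    return x


x_right = gradient_descent(2.0)   # starts to the right of the hump near x = 0
x_left = gradient_descent(-2.0)   # starts to the left of the hump near x = 0
print(x_right, hypothetical_loss(x_right))
print(x_left, hypothetical_loss(x_left))
```

Starting at 2.0, x settles near 1.04, the global minimum of this stand-in. Starting at -2.0, it settles near -0.96, a local minimum with a higher loss. Each step only uses the local slope, so neither run can see past the hump between the two basins.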

This exercise is part of the course Introduction to TensorFlow in Python.

Exercise instructions

- Set `opt` to use the stochastic gradient descent optimizer (SGD) with a learning rate of 0.01.
- Perform minimization using the loss function, `loss_function()`, and `x_1`, the variable with an initial value of 6.0.
- Perform minimization using the loss function, `loss_function()`, and `x_2`, the variable with an initial value of 0.3.
- Print `x_1` and `x_2` as `numpy` arrays and check whether the values differ. These are the minima that the algorithm identified.
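For reference, `keras.optimizers.SGD` keeps its default momentum of 0.0 here. On every call to `minimize`, it therefore applies the plain gradient-descent update to each variable in `var_list`, with learning rate $\eta = 0.01$ in this exercise. Here $\mathcal{L}$ stands for `loss_function()`:

$$
x \leftarrow x - \eta \, \frac{\partial \mathcal{L}(x)}{\partial x}, \qquad \eta = 0.01
$$

Each step depends only on the local slope at the current $x$. A small $\eta$ therefore tends to keep $x$ inside the basin it starts in, which is why the two starting values can end at different minima.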

Complete the sample code below by filling in each blank.

```python
from tensorflow import Variable, float32, keras

# loss_function() is predefined in the exercise environment

# Initialize x_1 and x_2 (dtype must be passed by keyword)
x_1 = Variable(6.0, dtype=float32)
x_2 = Variable(0.3, dtype=float32)

# Define the optimization operation
opt = keras.optimizers.____(learning_rate=____)

for j in range(100):
    # Perform minimization using the loss function and x_1
    opt.minimize(lambda: loss_function(____), var_list=[____])

    # Perform minimization using the loss function and x_2
    opt.minimize(lambda: ____, var_list=[____])

# Print x_1 and x_2 as numpy arrays
print(____.numpy(), ____.numpy())
```