Using the dense layer operation

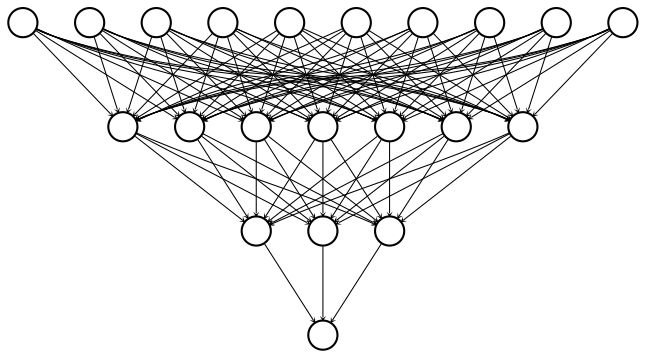

We've now seen how to define dense layers in TensorFlow using linear algebra. In this exercise, we'll skip the linear algebra and let Keras work out the details. This will allow us to construct the network pictured above, which has 2 hidden layers and 10 features, using less code than we needed for the network with 1 hidden layer and 3 features.
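To make "let Keras work out the details" concrete, here is a minimal sketch, assuming TensorFlow 2.x and using a random tensor (`features`, a hypothetical stand-in for real data). It builds one dense layer with Keras and then recomputes the same output by hand from the weights Keras created. The two results should agree up to floating-point rounding.

```python
import numpy as np
import tensorflow as tf
from tensorflow import keras

# Hypothetical stand-in data: 100 rows (borrowers) x 10 features.
features = tf.random.uniform((100, 10), dtype=tf.float32, seed=0)

# Keras version: calling the layer creates its weights and applies them.
layer = keras.layers.Dense(7, activation='sigmoid')
keras_out = layer(features)

# Linear-algebra version, reusing the weights Keras just created.
kernel, bias = layer.get_weights()  # kernel: (10, 7), bias: (7,)
manual_out = tf.sigmoid(tf.matmul(features, kernel) + bias)

# The two outputs should match up to floating-point rounding.
print(np.allclose(np.asarray(keras_out), np.asarray(manual_out), atol=1e-6))
```

The Keras call hides the weight initialization, the matrix multiplication, the bias addition, and the activation behind a single line.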

To construct this network, we'll need to define three dense layers. Each one takes the previous layer's output as its input, multiplies it by a weight matrix, adds a bias, and applies an activation function. The input data has already been defined and is available as a 100x10 tensor, `borrower_features`. Additionally, the `keras.layers` module is available.
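Each dense layer learns a weight matrix of shape (inputs, units) plus one bias per unit. The following is a minimal sketch, assuming TensorFlow 2.x, that rebuilds the same three layers as a `keras.Sequential` model purely so Keras can report the weights it creates; the exercise itself chains the layers by hand rather than using `Sequential`.

```python
from tensorflow import keras

# Same architecture as the exercise: 10 inputs -> 7 -> 3 -> 1, all sigmoid.
# Sequential is used here only to inspect the weights.
model = keras.Sequential([
    keras.Input(shape=(10,)),
    keras.layers.Dense(7, activation='sigmoid'),
    keras.layers.Dense(3, activation='sigmoid'),
    keras.layers.Dense(1, activation='sigmoid'),
])

# Each Dense layer holds a kernel of shape (inputs, units) and a bias of shape (units,).
for layer in model.layers:
    kernel, bias = layer.get_weights()
    print(layer.name, kernel.shape, bias.shape)

# Total trainable parameters across the three layers.
print(model.count_params())
```

With 10 input features this gives 10 * 7 + 7 = 77, 7 * 3 + 3 = 24, and 3 * 1 + 1 = 4 parameters, or 105 in total.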

This exercise is part of the course Introduction to TensorFlow in Python.

Exercise instructions

- Set `dense1` to be a dense layer with 7 output nodes and a sigmoid activation function.
- Define `dense2` to be a dense layer with 3 output nodes and a sigmoid activation function.
- Define `predictions` to be a dense layer with 1 output node and a sigmoid activation function.
- Print the shapes of `dense1`, `dense2`, and `predictions`, in that order, using the `.shape` attribute. Why does each of these tensors have 100 rows?

Have a go at this exercise by completing this sample code.

```python
# keras is provided by the exercise environment; outside it, import it like this
from tensorflow import keras

# Define the first dense layer
dense1 = keras.layers.Dense(____, activation='____')(borrower_features)

# Define a dense layer with 3 output nodes
dense2 = ____

# Define a dense layer with 1 output node
predictions = ____

# Print the shapes of dense1, dense2, and predictions
print('\n shape of dense1: ', dense1.shape)
print('\n shape of dense2: ', ____.shape)
print('\n shape of predictions: ', ____.shape)
```
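One way to complete the sample code is sketched below, assuming TensorFlow 2.x. Because the real `borrower_features` data is not reproduced here, the sketch substitutes a hypothetical random 100x10 tensor of the same shape; the layer calls themselves follow the exercise instructions.

```python
import tensorflow as tf
from tensorflow import keras

# Hypothetical stand-in for the course data: 100 borrowers x 10 features.
borrower_features = tf.random.uniform((100, 10), dtype=tf.float32, seed=1)

# Two hidden layers (7 and 3 nodes) and one output node, all sigmoid.
dense1 = keras.layers.Dense(7, activation='sigmoid')(borrower_features)
dense2 = keras.layers.Dense(3, activation='sigmoid')(dense1)
predictions = keras.layers.Dense(1, activation='sigmoid')(dense2)

# Print the shapes of dense1, dense2, and predictions
print('\n shape of dense1: ', dense1.shape)
print('\n shape of dense2: ', dense2.shape)
print('\n shape of predictions: ', predictions.shape)
```

Every output keeps 100 rows because a dense layer transforms each row (one borrower) independently; only the number of columns changes, from 10 features to 7, then 3, then 1.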