Optimizing with gradients

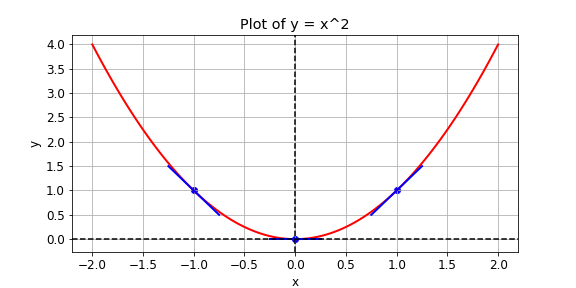

You are given a loss function, \(y = x^{2}\), which you want to minimize. You can do this by using the GradientTape() operation to compute the slope (the derivative \(dy/dx\)) at different values of x. If the slope is positive, you can decrease the loss by lowering x. If it is negative, you can decrease the loss by increasing x. Gradient descent repeats this step: it moves x a small amount in the direction opposite to the slope until the slope is close to zero.
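The rule above can be sketched as a short manual loop. This is a minimal illustration, not part of the exercise. The starting value `x = 3.0`, the `learning_rate` of 0.1 and the 50 iterations are arbitrary assumptions chosen for the sketch.

```python
import tensorflow as tf

# Start away from the minimum of y = x^2, which sits at x = 0 (assumed start value).
x = tf.Variable(3.0)
learning_rate = 0.1  # assumed step size, for illustration only

for step in range(50):
    with tf.GradientTape() as tape:
        y = tf.multiply(x, x)  # loss y = x^2
    slope = tape.gradient(y, x)  # dy/dx = 2x
    # Move against the slope: a positive slope lowers x, a negative slope raises it.
    x.assign_sub(learning_rate * slope)

print(x.numpy())  # close to 0
```

With these settings each update multiplies x by 0.8, so x shrinks steadily toward the minimum at 0.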

In practice, you will use a high-level TensorFlow operation to perform gradient descent automatically. In this exercise, however, you will compute the slope yourself at x values of -1, 1, and 0. The following operations are available: GradientTape(), multiply(), and Variable().
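As a sketch of what "high-level" can look like, one possible route is a built-in optimizer such as `tf.keras.optimizers.SGD`, which applies the update for you through `apply_gradients`. This is an assumption about which operation is meant; the course may use a different optimizer call. The starting value and learning rate are again illustrative.

```python
import tensorflow as tf

x = tf.Variable(3.0)  # assumed starting point
# Plain SGD (no momentum); the learning rate is an illustrative assumption.
optimizer = tf.keras.optimizers.SGD(learning_rate=0.1)

for step in range(50):
    with tf.GradientTape() as tape:
        y = tf.multiply(x, x)  # loss y = x^2
    grad = tape.gradient(y, x)
    # The optimizer performs x <- x - learning_rate * grad.
    optimizer.apply_gradients([(grad, x)])

print(x.numpy())  # close to 0
```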

This exercise is part of the course Introduction to TensorFlow in Python.

Exercise instructions

- Define `x` as a variable with the initial value `x0`.
- Set the loss function, `y`, equal to `x` multiplied by `x`. Use `multiply()` rather than operator overloading (`x * x`).
- Set the function to return the gradient of `y` with respect to `x`.
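For reference, the derivative the tape should reproduce follows from elementary calculus, which gives the slopes to expect at the three test points:

\[
y = x \cdot x, \qquad \frac{dy}{dx} = 2x, \qquad
\left.\frac{dy}{dx}\right|_{x=-1} = -2, \quad
\left.\frac{dy}{dx}\right|_{x=1} = 2, \quad
\left.\frac{dy}{dx}\right|_{x=0} = 0
\]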


Have a go at this exercise by completing this sample code.

```python
# Assumed to be preloaded in the exercise environment; added so the scaffold is self-contained.
from tensorflow import Variable, GradientTape, multiply

def compute_gradient(x0):
    # Define x as a variable with an initial value of x0
    x = ____(x0)
    with GradientTape() as tape:
        tape.watch(x)
        # Define y using the multiply operation
        y = ____
    # Return the gradient of y with respect to x
    return tape.gradient(____, ____).numpy()

# Compute and print gradients at x = -1, 1, and 0
print(compute_gradient(-1.0))
print(compute_gradient(1.0))
print(compute_gradient(0.0))
```
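One way to fill in the blanks, following the instructions literally (`Variable` for `x`, `multiply` for the loss, `tape.gradient(y, x)` for the slope), is sketched below. The printed values match the analytic derivative \(2x\).

```python
from tensorflow import Variable, GradientTape, multiply

def compute_gradient(x0):
    # Define x as a variable with an initial value of x0
    x = Variable(x0)
    with GradientTape() as tape:
        tape.watch(x)  # redundant for a trainable Variable, but harmless
        # Define y using the multiply operation (no operator overloading)
        y = multiply(x, x)
    # Return the gradient of y with respect to x as a NumPy value
    return tape.gradient(y, x).numpy()

print(compute_gradient(-1.0))  # -2.0
print(compute_gradient(1.0))   # 2.0
print(compute_gradient(0.0))   # 0.0
```

A negative slope at x = -1 says the loss falls as x increases, a positive slope at x = 1 says it falls as x decreases, and a zero slope at x = 0 marks the minimum.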