Audio denoising

In this exercise, you'll use data from the WHAM dataset, which mixes speech with background noise, to generate new speech in a different voice and without the background noise!

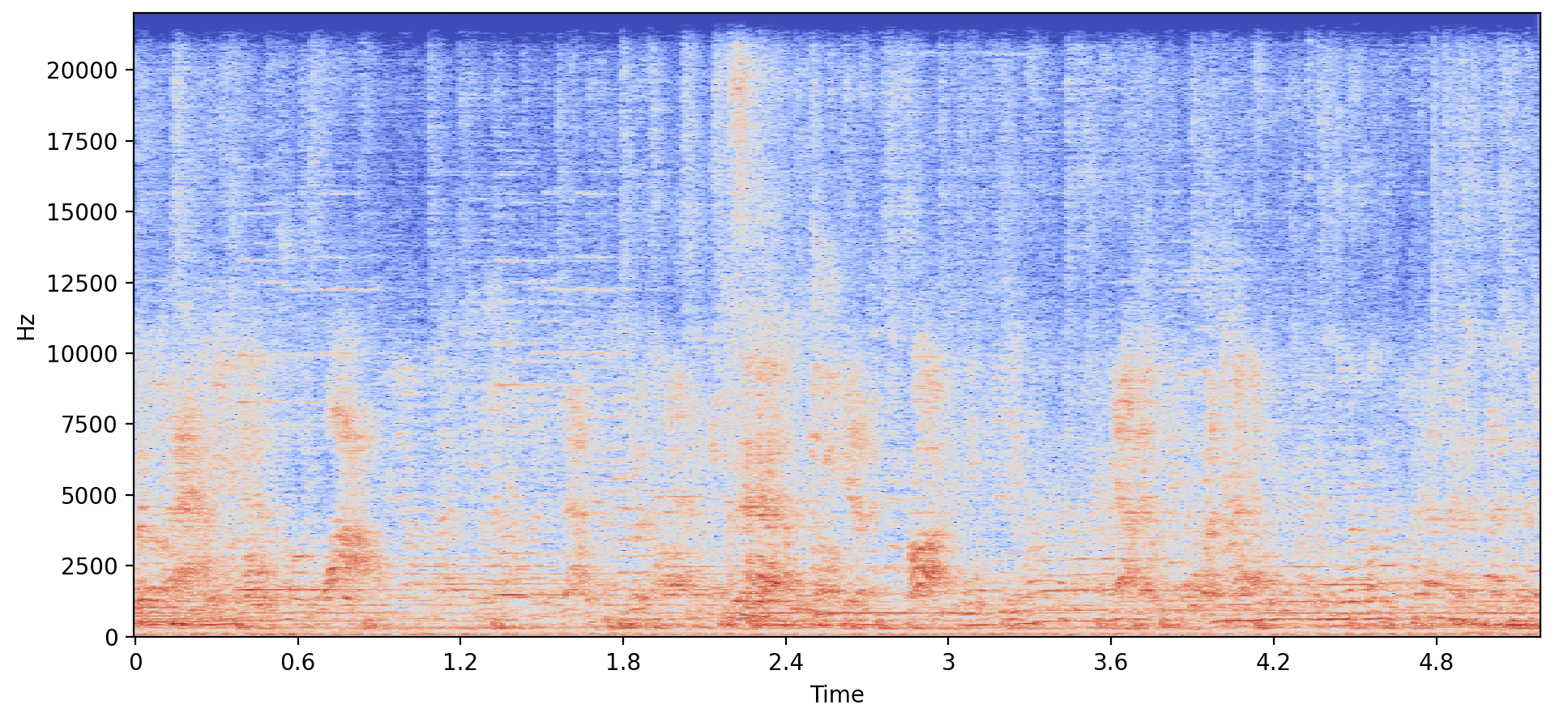

The `example_speech` array and the `speaker_embedding` vector for the new voice have already been loaded. The preprocessor (`processor`) and the vocoder (`vocoder`) are also available, along with the `SpeechT5ForSpeechToSpeech` module. A `make_spectrogram()` function has been provided to help with plotting.
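For readers working outside the course environment, the preloaded objects could be approximated along the following lines. This is only a sketch under assumptions: the helper name `load_preloaded_objects` is hypothetical, the vocoder checkpoint `microsoft/speecht5_hifigan` is assumed rather than stated by the exercise, and the all-zero speaker embedding is a placeholder, not the voice used in the course.

```python
# The voice-conversion checkpoint comes from the exercise text; the vocoder
# checkpoint is an assumption (the HiFi-GAN vocoder published for SpeechT5).
VC_CHECKPOINT = "microsoft/speecht5_vc"
VOCODER_CHECKPOINT = "microsoft/speecht5_hifigan"
XVECTOR_DIM = 512  # speaker embedding size expected by SpeechT5


def load_preloaded_objects():
    """Hypothetical helper returning stand-ins for processor, vocoder, speaker_embedding."""
    # Heavy imports stay inside the function so importing this module is cheap.
    import torch
    from transformers import SpeechT5HifiGan, SpeechT5Processor

    processor = SpeechT5Processor.from_pretrained(VC_CHECKPOINT)
    vocoder = SpeechT5HifiGan.from_pretrained(VOCODER_CHECKPOINT)
    # Placeholder only: a real x-vector for the target voice would go here.
    speaker_embedding = torch.zeros((1, XVECTOR_DIM))
    return processor, vocoder, speaker_embedding
```

Calling `processor, vocoder, speaker_embedding = load_preloaded_objects()` downloads the checkpoints on first use, so it needs network access plus the `transformers` and `torch` packages.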

This exercise is part of the course

Multimodal Models with Hugging Face

Exercise instructions

- Load the pretrained `SpeechT5ForSpeechToSpeech` model using the `microsoft/speecht5_vc` checkpoint.
- Preprocess `example_speech` with a sampling rate of `16000`.
- Generate the denoised speech using the `.generate_speech()` method.

Hands-on interactive exercise

Try this exercise by completing this sample code.

# Load the SpeechT5ForSpeechToSpeech pretrained model

model = ____

# Preprocess the example speech

inputs = ____(audio=____, sampling_rate=____, return_tensors="pt")

# Generate the denoised speech

speech = ____

make_spectrogram(speech)

sf.write("speech.wav", speech.numpy(), samplerate=16000)
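One possible way to fill in the blanks is sketched below, wrapped in a function so it can be reused. The function name `denoise_speech` is hypothetical; its `processor`, `vocoder`, `example_speech`, and `speaker_embedding` arguments stand for the objects the exercise says are already loaded. Treat it as an illustration of the three steps, not as the course's official solution.

```python
SAMPLING_RATE = 16000  # sampling rate given in the exercise instructions


def denoise_speech(example_speech, speaker_embedding, processor, vocoder):
    """Hypothetical wrapper around the three steps of the exercise."""
    # Imported here so the module itself loads without transformers installed.
    from transformers import SpeechT5ForSpeechToSpeech

    # Step 1: load the pretrained voice-conversion model.
    model = SpeechT5ForSpeechToSpeech.from_pretrained("microsoft/speecht5_vc")

    # Step 2: turn the raw waveform into model inputs (PyTorch tensors).
    inputs = processor(
        audio=example_speech,
        sampling_rate=SAMPLING_RATE,
        return_tensors="pt",
    )

    # Step 3: generate speech in the target voice; passing the vocoder
    # makes generate_speech return a waveform instead of a spectrogram.
    speech = model.generate_speech(
        inputs["input_values"],
        speaker_embedding,
        vocoder=vocoder,
    )
    return speech
```

The returned tensor can then be passed to `make_spectrogram(speech)` and saved with `sf.write("speech.wav", speech.numpy(), samplerate=16000)`, as in the template.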