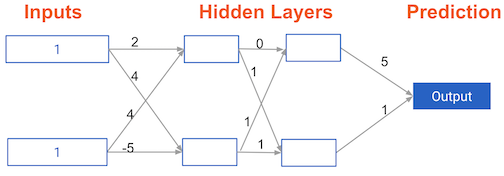

Forward propagation in a deeper network

You now have a model with 2 hidden layers. The values for an input data point are shown inside the input nodes. The weights are shown on the edges/lines. What prediction would this model make on this data point?

Assume the activation function at each node is the identity function. That is, each node's output will be the same as its input. So the value of the bottom node in the first hidden layer is -1, and not 0, as it would be if the ReLU activation function was used.

Deze oefening maakt deel uit van de cursus

Introduction to Deep Learning in Python

Praktische interactieve oefening

Zet theorie om in actie met een van onze interactieve oefeningen.

Begin met trainen

Begin met trainen