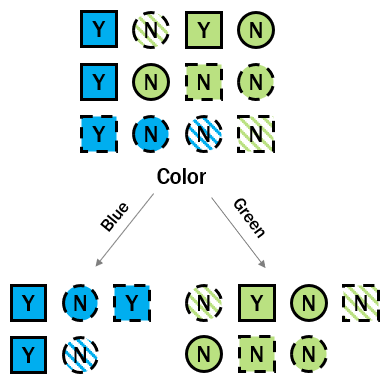

Calculating information gain of color

Now that you know the entropies of the root and child nodes, you can calculate the information gain that color provides.

In the prior exercises, you calculated entropy_root, entropy_left, and entropy_right. They are available in the console.

Remember that you will take the weighted average of the child node entropies. So, you will need to calculate what proportion of the original observations ended up on the left and right side of the split. Store those in p_left and p_right, respectively.
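Written out, the weighted-average step looks like the following. The symbols $H$ (entropy), $n$ (number of observations at the root), and $n_{\text{left}}$, $n_{\text{right}}$ (observations on each side) are notation introduced here for illustration; they are not names used by the exercise.

$$
p_{\text{left}} = \frac{n_{\text{left}}}{n}, \qquad
p_{\text{right}} = \frac{n_{\text{right}}}{n}, \qquad
p_{\text{left}} + p_{\text{right}} = 1
$$

$$
\text{info\_gain} = H_{\text{root}} - \left( p_{\text{left}} \, H_{\text{left}} + p_{\text{right}} \, H_{\text{right}} \right)
$$

Because the two weights sum to 1, the term in parentheses is a true weighted average of the child entropies, and the information gain is how much that average falls below the root entropy.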

This exercise is part of the course

Dimensionality Reduction in R

Exercise instructions

- Calculate the split weights, that is, the proportion of observations on each side of the split.

- Calculate the information gain using the weights and the entropies.
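As a rough illustration of these two steps, here is a minimal sketch on a made-up split. The labels, the 4/6 split, the helper `entropy()`, and the names `w_left`, `w_right`, and `gain` are all hypothetical and are not the exercise's data or its solution; the sketch also assumes entropy is measured in bits (log base 2).

```r
# Hypothetical toy data (NOT the exercise's data set):
# class labels of the observations that went left and right.
left_labels  <- c("yes", "yes", "yes", "no")
right_labels <- c("no", "no", "no", "no", "yes", "yes")

# Shannon entropy of a vector of class labels, in bits (assumed base 2).
# table() only counts classes that are present, so no log2(0) occurs.
entropy <- function(labels) {
  p <- table(labels) / length(labels)
  -sum(p * log2(p))
}

# Step 1: split weights = share of all observations on each side
n_total <- length(left_labels) + length(right_labels)
w_left  <- length(left_labels) / n_total
w_right <- length(right_labels) / n_total

# Step 2: information gain = root entropy minus the
# weighted average of the child entropies
gain <- entropy(c(left_labels, right_labels)) -
  (w_left * entropy(left_labels) + w_right * entropy(right_labels))
gain
```

In the exercise itself the entropies are already computed, so only the two weights and the final subtraction remain to be filled in.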

Hands-on interactive exercise

Try this exercise by completing the sample code.

# Calculate the split weights

p_left <- ___/12

p_right <- ___/___

# Calculate the information gain

info_gain <- ___ -

(___ * entropy_left +

p_right * ___)

info_gain