Audio denoising

In this exercise, you will use data from the WHAM dataset, which mixes speech with background noise, to generate new speech in a different voice and with the background noise removed!

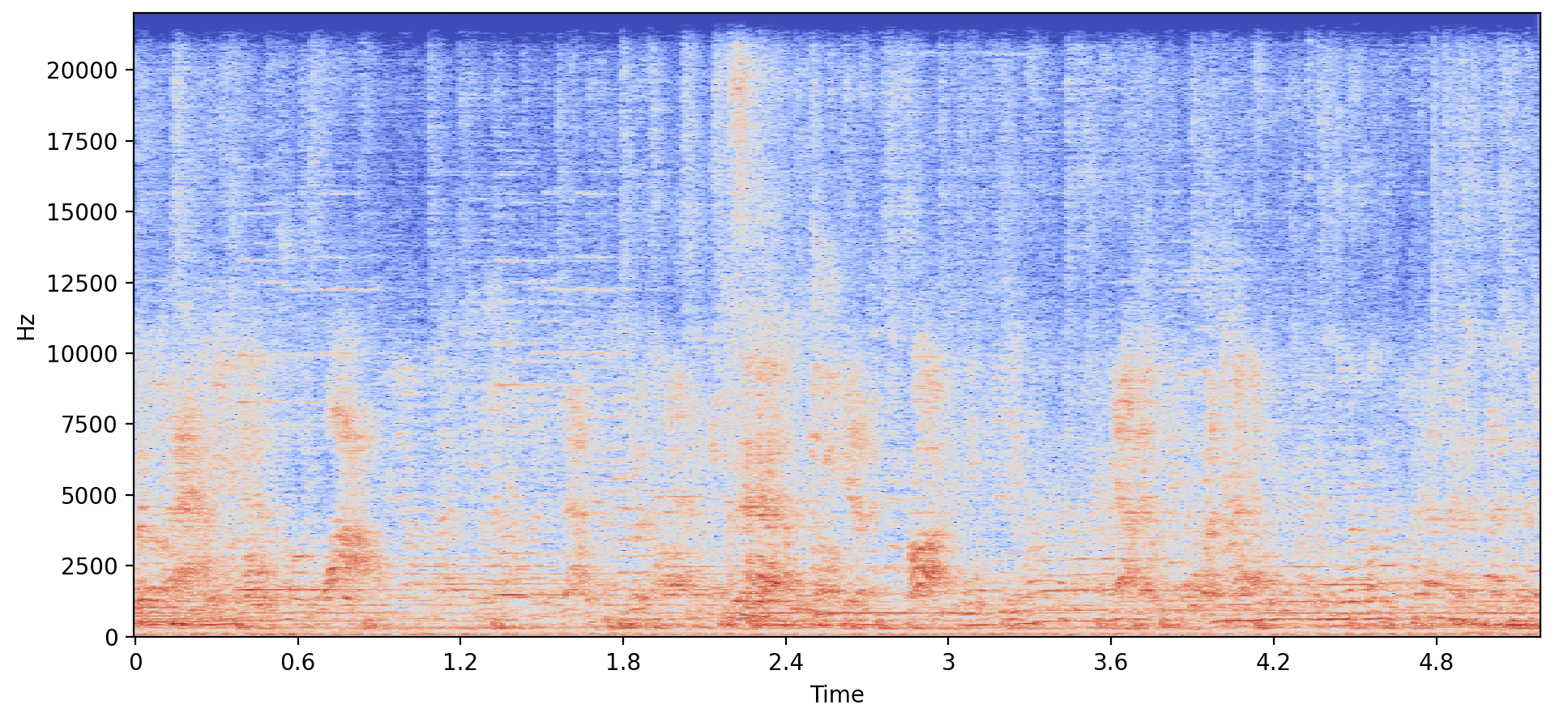

The example_speech array and the speaker_embedding vector of the new voice have already been loaded. The preprocessor (processor) and vocoder (vocoder) are also available, along with the SpeechT5ForSpeechToSpeech class. A make_spectrogram() function has been provided to help with plotting.
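The source of the provided make_spectrogram() helper isn't shown. The hypothetical NumPy sketch below gives a rough idea of what such a helper computes: a log-magnitude spectrogram, that is, a short-time Fourier transform with a Hann window, converted to decibels. The name log_spectrogram and the defaults n_fft=1024 and hop_length=256 are assumptions, not the course's code. The real helper may use a different library or a mel frequency scale.

```python
import numpy as np


def log_spectrogram(speech, n_fft=1024, hop_length=256):
    """Hypothetical stand-in for make_spectrogram(): log-magnitude STFT in dB."""
    # Accepts a list, a NumPy array, or a CPU tensor holding a 1-D waveform
    x = np.asarray(speech, dtype=np.float32)
    # Zero-pad very short clips so that at least one full frame exists
    if x.size < n_fft:
        x = np.pad(x, (0, n_fft - x.size))
    window = np.hanning(n_fft).astype(np.float32)
    n_frames = 1 + (x.size - n_fft) // hop_length
    # Slice the signal into overlapping windowed frames: shape (n_frames, n_fft)
    frames = np.stack(
        [x[i * hop_length : i * hop_length + n_fft] * window for i in range(n_frames)]
    )
    # Magnitude of the one-sided FFT of each frame: shape (n_frames, n_fft // 2 + 1)
    magnitude = np.abs(np.fft.rfft(frames, axis=1))
    # Transpose to (frequency bins, time frames) and convert to decibels;
    # the small offset avoids taking log10 of zero in silent frames
    return 20 * np.log10(magnitude.T + 1e-10)
```

The result has frequency bins on the first axis and time frames on the second, so it can be displayed with matplotlib's imshow(..., origin="lower", aspect="auto"). Plotting example_speech and the generated speech side by side should make the removed background noise visible as lower energy between the speech segments.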

This exercise is part of the course Multi-Modal Models with Hugging Face.

Exercise instructions

- Load the SpeechT5ForSpeechToSpeech pretrained model using the microsoft/speecht5_vc checkpoint.
- Preprocess example_speech with a sampling rate of 16000.
- Generate the denoised speech using the .generate_speech() method.

Hands-on interactive exercise

Have a go at this exercise by completing this sample code.

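```python
import soundfile as sf  # writes the generated waveform to a WAV file

# Load the SpeechT5ForSpeechToSpeech pretrained model
model = ____

# Preprocess the example speech
inputs = ____(audio=____, sampling_rate=____, return_tensors="pt")

# Generate the denoised speech
speech = ____

# Plot the spectrogram of the output, then save it as a 16 kHz WAV file
make_spectrogram(speech)
sf.write("speech.wav", speech.numpy(), samplerate=16000)
```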
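One way to fill in the blanks is sketched here as a small function so that each step maps to one blank. This is based on the Hugging Face transformers SpeechT5 API and is not the course's official solution. The function name convert_voice and its parameter layout are hypothetical. It also assumes that speaker_embedding is a tensor of shape (1, 512), which is the usual shape for SpeechT5 speaker embeddings.

```python
def convert_voice(example_speech, speaker_embedding, processor, vocoder,
                  model=None, sampling_rate=16000):
    """Hypothetical helper: denoise example_speech and re-voice it with SpeechT5."""
    # Step 1: load the pretrained voice-conversion checkpoint unless one is passed in.
    # The import is deferred so transformers is only needed when loading here.
    if model is None:
        from transformers import SpeechT5ForSpeechToSpeech

        model = SpeechT5ForSpeechToSpeech.from_pretrained("microsoft/speecht5_vc")

    # Step 2: turn the raw 16 kHz waveform into model-ready input_values
    inputs = processor(audio=example_speech, sampling_rate=sampling_rate,
                       return_tensors="pt")

    # Step 3: generate speech in the target voice; with a vocoder supplied,
    # generate_speech() returns a waveform tensor rather than a spectrogram
    return model.generate_speech(inputs["input_values"], speaker_embedding,
                                 vocoder=vocoder)
```

In the exercise environment this reduces to speech = convert_voice(example_speech, speaker_embedding, processor, vocoder). After that, make_spectrogram(speech) and sf.write(...) work as in the scaffold. Written inline, the three blanks correspond to from_pretrained("microsoft/speecht5_vc"), processor(audio=example_speech, sampling_rate=16000, ...) and model.generate_speech(inputs["input_values"], speaker_embedding, vocoder=vocoder).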