Custom image editing

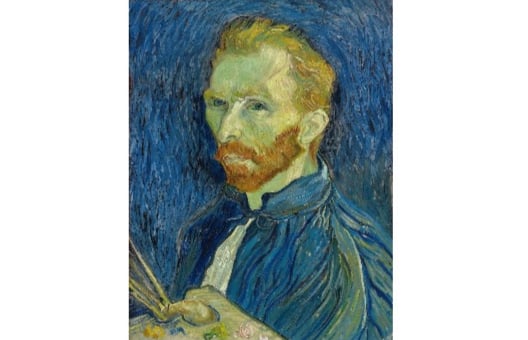

AI image generation is already pretty cool, but some models also support custom image editing, a multi-modal variant of image generation that takes both a text prompt and a source image as input. Have a go at turning this famous Van Gogh self-portrait into the cartoon character Snoopy using the StableDiffusionControlNetPipeline.

Note: Inference on diffusion models can take a long time, so we've pre-loaded the generated image for you. Running different prompts will not generate new images.

The Canny filter version of the image has been created for you (canny_image). The StableDiffusionControlNetPipeline and ControlNetModel classes have been imported from the diffusers library. The generator list (generator) has been created.
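For readers curious how a conditioning image like canny_image might be prepared, here is a minimal sketch. It is not the course's actual preprocessing code. The function names, the synthetic test image, and the 100/200 thresholds are all assumptions for illustration. It uses OpenCV's cv2.Canny when OpenCV is installed. Otherwise it falls back to a plain gradient threshold, which is a simplified stand-in and not real Canny edge detection.

```python
import numpy as np


def canny_edges(gray: np.ndarray, low: int = 100, high: int = 200) -> np.ndarray:
    """Return a 0/255 uint8 edge map for a single-channel uint8 image."""
    try:
        import cv2  # optional dependency

        return cv2.Canny(gray, low, high)
    except ImportError:
        # Fallback, NOT real Canny: threshold the gradient magnitude only
        # (no smoothing, non-maximum suppression, or hysteresis).
        gy, gx = np.gradient(gray.astype(np.float32))
        magnitude = np.hypot(gx, gy)
        return np.where(magnitude >= low, 255, 0).astype(np.uint8)


def to_controlnet_condition(edges: np.ndarray) -> np.ndarray:
    """Replicate a single-channel edge map into an H x W x 3 uint8 array."""
    edges = edges.astype(np.uint8)
    return np.concatenate([edges[:, :, None]] * 3, axis=2)


# Synthetic stand-in for the portrait: a bright square on a dark background
gray = np.zeros((64, 64), dtype=np.uint8)
gray[16:48, 16:48] = 255

condition = to_controlnet_condition(canny_edges(gray))
print(condition.shape, condition.dtype)
# With Pillow installed, PIL.Image.fromarray(condition) would turn this
# array into an image object that can be displayed or passed on.
```

The edge map is repeated across three channels because the conditioning image is handled as an ordinary RGB image. The white-on-black outlines are what steer the layout of the generated picture.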

This exercise is part of the course

Multi-Modal Models with Hugging Face

Exercise instructions

- Load the ControlNetModel from the lllyasviel/sd-controlnet-canny checkpoint.
- Load the StableDiffusionControlNetPipeline from the runwayml/stable-diffusion-v1-5 checkpoint, passing the controlnet provided.
- Run the pipeline using the prompt, canny_image, and the negative_prompt and generator provided.

Hands-on interactive exercise

Have a go at this exercise by completing this sample code.

## NOTE: no imports are required for this exercise

# Load a ControlNetModel from the pretrained checkpoint
controlnet = ____("____", torch_dtype=torch.float16)

# Load a pretrained StableDiffusionControlNetPipeline using the ControlNetModel
pipe = ____(
    "____", controlnet=____, torch_dtype=torch.float16
)
pipe = pipe.to("cuda")

prompt = ["Snoopy, best quality, extremely detailed"]

# Run the pipeline
output = pipe(
    ____,
    ____,
    negative_prompt=["monochrome, lowres, bad anatomy, worst quality, low quality"],
    generator=____,
    num_inference_steps=20,
)

plt.imshow(output.images[0])
plt.show()