Image inpainting

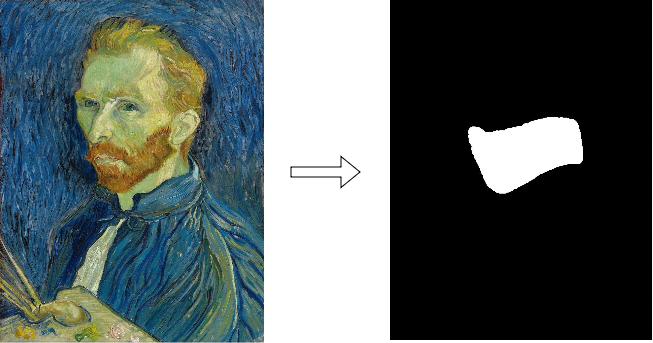

Let's put a spin on multi-modal image generation by combining it with image inpainting. You will modify Van Gogh's self-portrait to give him a black beard, using the StableDiffusionControlNetInpaintPipeline and an image mask that has been created for you (mask_image).
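The mask in this exercise is provided, so its construction is not shown. As a rough illustration only, an inpainting mask can be thought of as a single-channel image where white pixels mark the region to repaint and black pixels mark the region to keep. The sketch below builds such a mask with NumPy; the helper name make_rect_mask and the rectangle coordinates are hypothetical and are not taken from the course.

```python
import numpy as np


def make_rect_mask(height, width, top, left, bottom, right):
    """Hypothetical helper: build a rectangular inpainting mask.

    Returns a (height, width) uint8 array that is 255 (white, repaint)
    inside the rectangle and 0 (black, keep) everywhere else.
    """
    mask = np.zeros((height, width), dtype=np.uint8)
    mask[top:bottom, left:right] = 255
    return mask


# Made-up coordinates for a 512x512 portrait; the real beard region
# used in the exercise is not given in the text.
demo_mask = make_rect_mask(512, 512, top=300, left=180, bottom=420, right=330)
```

If Pillow is available, an array like this can be turned into an image with PIL.Image.fromarray(demo_mask) before being passed as a mask.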

Note: Inference on diffusion models can take a long time, so we've pre-loaded the generated image for you. Running different prompts will not generate new images.

The original version of the image has been loaded as init_image, along with a control image (control_image) created with the make_inpaint_condition() function from the video.
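The make_inpaint_condition() function itself is not reproduced in this exercise. A minimal sketch of what such a function might do, assuming it follows the common pattern of scaling the image to [0, 1] and flagging masked pixels with -1 so the ControlNet can tell them apart, is shown below. The name make_inpaint_condition_sketch is hypothetical; this version works on NumPy arrays only, whereas the course version presumably takes PIL images and returns a PyTorch tensor.

```python
import numpy as np


def make_inpaint_condition_sketch(image, mask):
    """Hypothetical NumPy-only stand-in for make_inpaint_condition().

    image: (H, W, 3) uint8 RGB array.
    mask:  (H, W) uint8 array, 255 where the image should be repainted.
    Returns a float32 array of shape (1, 3, H, W) with values in [0, 1],
    except masked pixels, which are set to -1.0 in every channel.
    """
    if image.shape[:2] != mask.shape[:2]:
        raise ValueError("image and mask must have the same height and width")

    # astype() copies, so the caller's arrays are left untouched.
    scaled = image.astype(np.float32) / 255.0
    mask_f = mask.astype(np.float32) / 255.0

    # Flag every pixel inside the mask as "to be repainted".
    scaled[mask_f > 0.5] = -1.0

    # Add a batch dimension and move channels first: (1, 3, H, W).
    return np.expand_dims(scaled, 0).transpose(0, 3, 1, 2)


# Tiny synthetic example: a white 4x4 image with a 2x2 masked centre.
demo_image = np.full((4, 4, 3), 255, dtype=np.uint8)
demo_region = np.zeros((4, 4), dtype=np.uint8)
demo_region[1:3, 1:3] = 255
demo_control = make_inpaint_condition_sketch(demo_image, demo_region)
```

Marking masked pixels with a value outside the normal [0, 1] range is what lets the control image carry both the visible content and the location of the region to fill.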

This exercise is part of the course

Multi-Modal Models with Hugging Face

Exercise instructions

- Run the pipeline with a prompt designed to generate a black beard, specifying num_inference_steps=40 and passing the init_image, mask_image, and control_image.

Hands-on interactive exercise

Have a go at this exercise by completing this sample code.

    # Run the pipeline requesting a black beard
    output = pipe(
        ____,
        num_inference_steps=____,
        eta=1.0,
        image=____,
        mask_image=____,
        control_image=____
    )

    plt.imshow(output.images[0])
    plt.show()