Pipeline caption generation

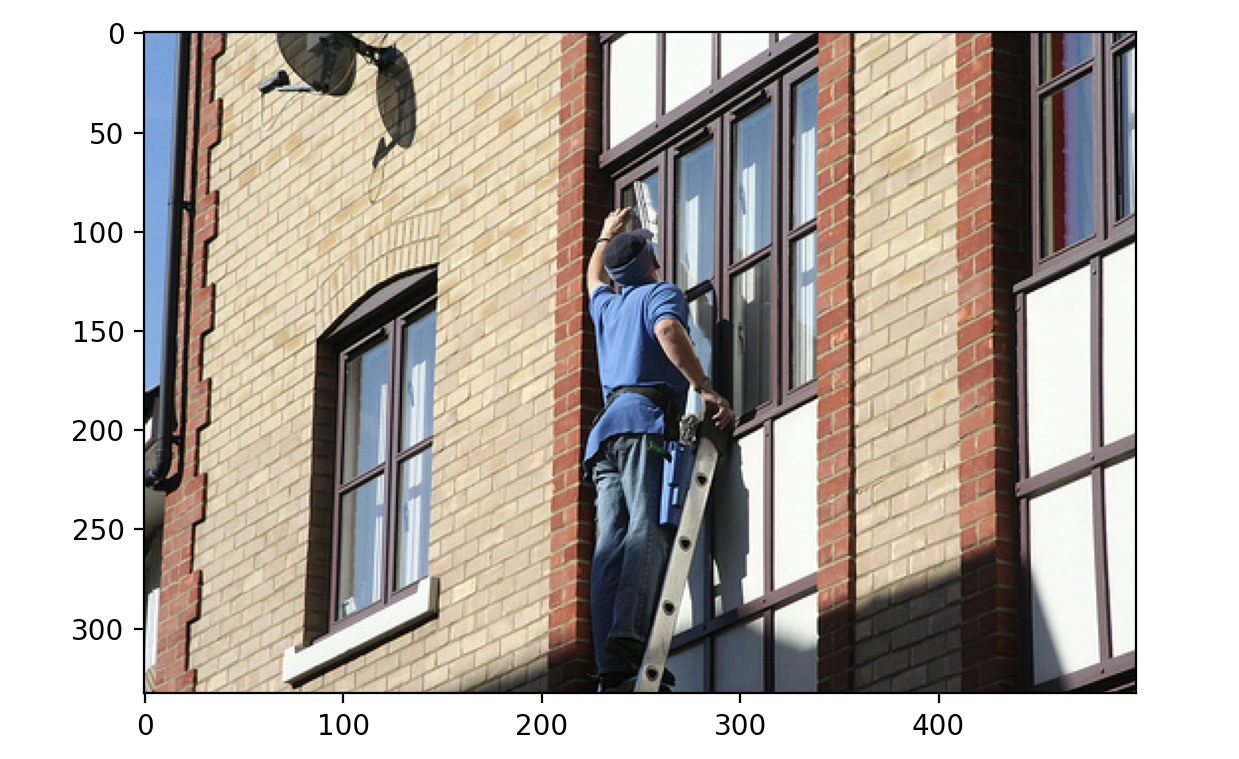

In this exercise, you'll again use the Flickr dataset, which has 30,000 images and associated captions. This time, you'll generate a caption for the image shown above using a pipeline instead of the auto classes.

A 10-row sample of the dataset has been loaded as `dataset`, with the following structure:

    Dataset({
        features: ['image', 'caption', 'sentids', 'split', 'img_id', 'filename'],
        num_rows: 10
    })

The `pipeline` function from `transformers` has also been imported.

This exercise is part of the course *Multi-Modal Models with Hugging Face*.

Exercise instructions

- Load the `image-to-text` pipeline with the `Salesforce/blip-image-captioning-base` pretrained model.
- Use the pipeline to generate a caption for the image at index `3`.


Have a go at this exercise by completing this sample code.

    # Load the image-to-text pipeline
    pipe = pipeline(task="____", model="____")

    # Use the pipeline to generate a caption for the image of datapoint 3
    pred = ____(dataset[3]["____"])

    print(pred)
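The two instructions can be combined into a short sketch. It assumes `transformers` (plus a backend such as PyTorch) is installed and that the checkpoint can be downloaded. The helper names `load_captioner`, `first_caption`, and `caption_datapoint` are hypothetical, introduced only for illustration. The sketch relies on the image-to-text pipeline returning a list of dicts with a `generated_text` key.

```python
# Minimal sketch completing the exercise scaffold.
# Assumptions: `transformers` plus a backend such as PyTorch is installed,
# the checkpoint can be downloaded, and `dataset` is indexable like the
# Hugging Face Dataset described above (dataset[i]["image"] -> image).
# The helper names below are hypothetical, chosen for illustration.

MODEL_NAME = "Salesforce/blip-image-captioning-base"


def load_captioner(model_name=MODEL_NAME):
    """Build the image-to-text pipeline for the BLIP captioning checkpoint."""
    # Imported here so the other helpers can be tried without transformers.
    from transformers import pipeline

    return pipeline(task="image-to-text", model=model_name)


def first_caption(pred):
    """The pipeline returns a list of dicts like [{"generated_text": "..."}];
    return the text of the first one."""
    return pred[0]["generated_text"]


def caption_datapoint(pipe, dataset, index=3):
    """Caption one datapoint's image and pair it with its reference caption."""
    # Equivalent to pipe(dataset[3]["image"]) for the default index.
    pred = pipe(dataset[index]["image"])
    return first_caption(pred), dataset[index]["caption"]
```

In the exercise environment, where `dataset` is already loaded, `generated, reference = caption_datapoint(load_captioner(), dataset, 3)` would give the model's caption next to the dataset's own `caption` field for datapoint 3. Keeping the `transformers` import inside `load_captioner` is only a convenience so the other helpers can be tried with any stand-in callable.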