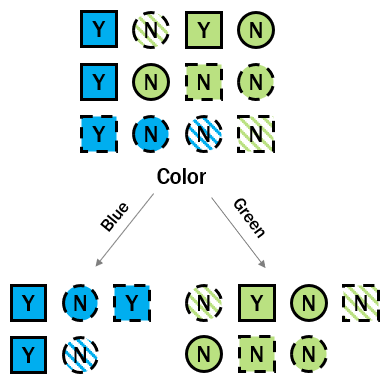

Calculating the information gain from color

Now that you know the entropies of the root node and the child nodes, you can calculate the information gained by splitting on color.

In the previous exercises, you calculated entropy_root, entropy_left, and entropy_right. They are available in the console.

Recall that the information gain subtracts the weighted average of the child node entropies from the root entropy. You therefore need the proportion of the original observations that falls on the left and on the right of the split. Store these proportions in p_left and p_right, respectively.
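As a compact reminder, the calculation described above can be written as a single formula. The symbols here are notational assumptions that do not appear in the exercise: $H$ stands for an entropy, $n_{\text{left}}$ and $n_{\text{right}}$ for the number of observations on each side of the split, and $n$ for the number of observations at the root.

$$
\text{info\_gain} = H_{\text{root}} - \left( p_{\text{left}} \, H_{\text{left}} + p_{\text{right}} \, H_{\text{right}} \right),
\qquad
p_{\text{left}} = \frac{n_{\text{left}}}{n},
\quad
p_{\text{right}} = \frac{n_{\text{right}}}{n}
$$

Because every observation goes to exactly one side, $p_{\text{left}} + p_{\text{right}} = 1$.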

This exercise is part of the course

Dimensionality Reduction in R

Instructions

- Calculate the split weights, that is, the proportion of observations on each side of the split.

- Calculate the information gain using the weights and the entropies.
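The two steps above can be sketched on made-up data. This is only an illustration under stated assumptions: the class counts, the `entropy()` helper, and every `example_*` and `w_*` name are hypothetical and are not the exercise's data or solution. The example deliberately uses 10 observations rather than the exercise's 12.

```r
# Hypothetical helper: Shannon entropy (in bits) of a vector of class counts
entropy <- function(counts) {
  p <- counts / sum(counts)
  p <- p[p > 0]  # drop empty classes so that 0 * log2(0) counts as 0
  -sum(p * log2(p))
}

# Illustrative class counts (NOT the exercise data): two classes, 10 observations
root_counts  <- c(5, 5)  # before the split
left_counts  <- c(4, 0)  # observations sent to the left child
right_counts <- c(1, 5)  # observations sent to the right child

example_entropy_root  <- entropy(root_counts)
example_entropy_left  <- entropy(left_counts)
example_entropy_right <- entropy(right_counts)

# Split weights: share of the root's observations in each child
n_total <- sum(root_counts)
w_left  <- sum(left_counts) / n_total
w_right <- sum(right_counts) / n_total

# Information gain: root entropy minus the weighted average of child entropies
example_info_gain <- example_entropy_root -
  (w_left * example_entropy_left + w_right * example_entropy_right)

example_info_gain
```

With these illustrative counts the left child is pure, so its entropy is 0, and the gain comes out at roughly 0.61 bits. The weights sum to 1, which is a quick sanity check worth running on your own values as well.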

Hands-on interactive exercise

Try this exercise by completing the sample code below.

    # Calculate the split weights
    p_left <- ___/12
    p_right <- ___/___

    # Calculate the information gain
    info_gain <- ___ -
      (___ * entropy_left +
       p_right * ___)

    info_gain