Calculating the entropy of the child nodes

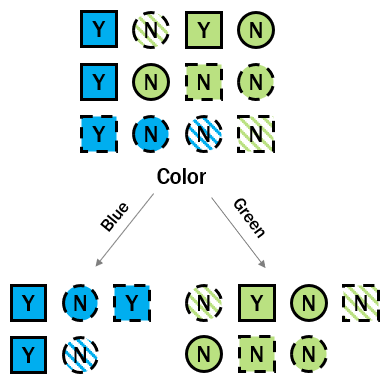

You have completed the first step in measuring the information gain provided by color: you calculated the disorder of the root node. You now need to measure the entropy of the child nodes to check whether their combined disorder is lower than that of the parent node. If it is, the color variable has given you information about loan default status.
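As a sketch of the comparison described above, the quantities can be written out as follows. The symbols are assumptions introduced here for illustration: $p_{\text{yes}}$ and $p_{\text{no}}$ are the class proportions within a node, $n_{\text{left}}$ and $n_{\text{right}}$ are the numbers of observations in each child, and $n$ is the number in the parent. The usual convention weights each child's entropy by its share of the observations; the exercise itself may combine them differently.

$$
H(\text{node}) = -\,p_{\text{yes}} \log_2 p_{\text{yes}} \;-\; p_{\text{no}} \log_2 p_{\text{no}}
$$

$$
\text{Gain} = H(\text{parent}) - \left( \frac{n_{\text{left}}}{n}\, H(\text{left}) + \frac{n_{\text{right}}}{n}\, H(\text{right}) \right)
$$

A positive gain means the children are, on average, less disordered than the parent.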

This exercise is part of the course

Dimensionality Reduction in R

Instructions

- Calculate the class probabilities for the left split (the blue side).

- Calculate the entropy of the left split from the class probabilities.

- Calculate the class probabilities for the right split (the green side).

- Calculate the entropy of the right split from the class probabilities.
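The steps above follow the same pattern for each split, which can be sketched in R as below. The counts are hypothetical and chosen only for illustration; they are not the values from the exercise figure. The variable names (`n_yes`, `n_no`, `entropy_node`) are likewise made up, and the sketch assumes entropy is measured in bits with `log2()`.

```r
# Hypothetical counts for ONE node (not taken from the exercise figure)
n_yes <- 3  # observations in the node that defaulted
n_no  <- 1  # observations in the node that did not default

# Class probabilities: share of each class within the node
p_yes <- n_yes / (n_yes + n_no)
p_no  <- n_no / (n_yes + n_no)

# Binary entropy in bits: one term per class, summed
entropy_node <- -(p_yes * log2(p_yes)) +
  -(p_no * log2(p_no))

entropy_node  # approximately 0.811
```

Note that if a node is pure, one probability is 0 and `0 * log2(0)` evaluates to `NaN` in R; the usual convention is to treat that term as 0.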

Hands-on interactive exercise

Try this exercise by completing the sample code.

    # Calculate the class probabilities in the left split
    p_left_yes <- ___
    p_left_no <- ___

    # Calculate the entropy of the left split
    entropy_left <- -(___ * ___(___)) +
      -(___ * ___(___))

    # Calculate the class probabilities in the right split
    p_right_yes <- ___
    p_right_no <- ___

    # Calculate the entropy of the right split
    entropy_right <- -(___ * ___(___)) +
      -(___ * ___(___))