Bildvorverarbeitung

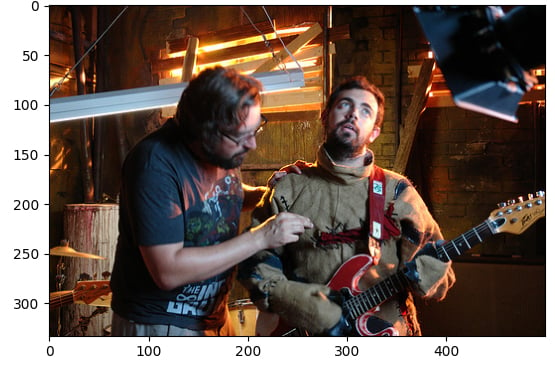

In dieser Übung wirst du den Flickr-Datensatz mit 30.000 Bildern und dazugehörigen Bildunterschriften verwenden, um Vorverarbeitungsoperationen an Bildern durchzuführen. Diese Vorverarbeitung ist nötig, damit die Bilddaten für die Inferenz mit Hugging Face-Modulaufgaben, wie zum Beispiel die Textgenerierung aus Bildern, verwendet werden können. In diesem Fall erstellst du eine Bildunterschrift für dieses Bild:

Der Datensatz (dataset) wurde mit folgender Struktur geladen:

Dataset({

features: ['image', 'caption', 'sentids', 'split', 'img_id', 'filename'],

num_rows: 10

})

Das Bildbeschriftungsmodell (model) wurde geladen.

Diese Übung ist Teil des Kurses

Multimodale Modelle mit Hugging Face

Anleitung zur Übung

- Lade das Bild aus dem Element mit dem Index „

5” des Datensatzes. - Lade den Bildprozessor (

BlipProcessor) des vortrainierten Modells:Salesforce/blip-image-captioning-base. - Führ den Prozessor auf „

image“ aus und gib dabei an, dass PyTorch-Tensoren (pt) gebraucht werden. - Verwende die Methode „

.generate()“, um eine Bildunterschrift mit dem „model“ zu erstellen.

Interaktive Übung

Vervollständige den Beispielcode, um diese Übung erfolgreich abzuschließen.

# Load the image from index 5 of the dataset

image = dataset[5]["____"]

# Load the image processor of the pretrained model

processor = ____.____("Salesforce/blip-image-captioning-base")

# Preprocess the image

inputs = ____(images=____, return_tensors="pt")

# Generate a caption using the model

output = ____(**inputs)

print(f'Generated caption: {processor.decode(output[0])}')

print(f'Original caption: {dataset[5]["caption"][0]}')