Unboxing the SHAP

Part of the appeal of XAI (Explainable AI) tools like SHAP is that they show not only the overall importance of the predictor features behind a model, but also how each input feature contributes to one specific output or prediction of that model.

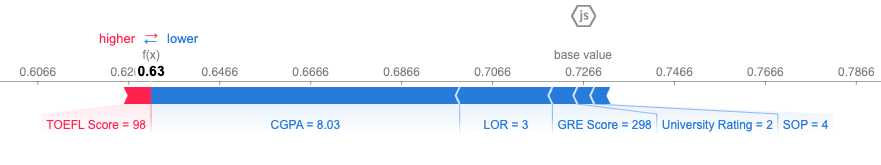

The plot above shows how much different common U.S. academic test scores contribute to the estimated likelihood of university admission for a single student. The resulting model output for this student's admission decision is 0.63.
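To make the idea of a per-prediction explanation concrete, here is a minimal sketch that computes exact Shapley values for one student by enumerating every feature coalition. Everything in it is an assumption for illustration: the feature names (`sat`, `act`, `ap`), the weights, the baseline "average applicant", and the toy logistic model are hypothetical and are not taken from the exercise or from the SHAP library. Features left out of a coalition are simply replaced by their baseline value, which is one common simplification rather than the only way to do it.

```python
from itertools import combinations
from math import exp, factorial

# Hypothetical features, weights, and bias for a toy admission model.
FEATURES = ["sat", "act", "ap"]
WEIGHTS = {"sat": 0.004, "act": 0.1, "ap": 0.3}
BIAS = -8.2


def predict(x):
    """Toy logistic model: returns an admission likelihood in (0, 1)."""
    z = BIAS + sum(WEIGHTS[f] * x[f] for f in FEATURES)
    return 1.0 / (1.0 + exp(-z))


def coalition_value(coalition, student, baseline):
    """Prediction when only the features in `coalition` take the student's values."""
    mixed = {f: student[f] if f in coalition else baseline[f] for f in FEATURES}
    return predict(mixed)


def shapley_values(student, baseline):
    """Exact Shapley values: weighted average marginal contribution of each feature."""
    n = len(FEATURES)
    phi = {}
    for f in FEATURES:
        others = [g for g in FEATURES if g != f]
        total = 0.0
        for k in range(len(others) + 1):
            # Weight of any coalition of size k that excludes f.
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for subset in combinations(others, k):
                with_f = coalition_value(set(subset) | {f}, student, baseline)
                without_f = coalition_value(set(subset), student, baseline)
                total += weight * (with_f - without_f)
        phi[f] = total
    return phi


# Hypothetical baseline applicant and the single student being explained.
baseline = {"sat": 1200, "act": 25, "ap": 3}
student = {"sat": 1350, "act": 23, "ap": 4}

phi = shapley_values(student, baseline)
for name, contribution in phi.items():
    print(f"{name}: {contribution:+.3f}")
print(f"baseline prediction: {predict(baseline):.3f}")
print(f"student prediction:  {predict(student):.3f}")
```

In this sketch the contributions add up to the difference between the student's prediction and the baseline prediction, which is the property that lets a plot like the one above split a single output into per-feature pieces. A feature on which the student scores below the baseline (here `act`) receives a negative contribution, because this toy model increases with every score.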

Below are four statements related to feature importance and model behavior in this individual prediction.

One of these statements is false. Can you find it?

This exercise is part of the course

Understanding Artificial Intelligence
