Multi-layer neural networks

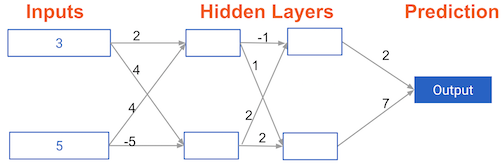

In this exercise, you'll write code to do forward propagation for a neural network with 2 hidden layers. Each hidden layer has two nodes. The input data has been preloaded as input_data. The nodes in the first hidden layer are called node_0_0 and node_0_1. Their weights are pre-loaded as weights['node_0_0'] and weights['node_0_1'] respectively.

The nodes in the second hidden layer are called node_1_0 and node_1_1. Their weights are pre-loaded as weights['node_1_0'] and weights['node_1_1'] respectively.

We then compute the model output from the nodes in the second hidden layer, using the weights pre-loaded as weights['output'].
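Each node's value is a weighted sum of the values feeding into it. A hidden node then passes that sum through the ReLU activation. The sketch below computes the two first-layer nodes this way. The relu() definition and the numbers in input_data and weights are illustrative assumptions, not necessarily the exercise's pre-loaded values.

```python
import numpy as np

def relu(x):
    """Rectified linear unit (assumed definition): x if positive, else 0."""
    return max(0, x)

# Hypothetical example values, assumed for illustration only
input_data = np.array([3, 5])
weights = {
    'node_0_0': np.array([2, 4]),
    'node_0_1': np.array([4, -5]),
}

# Weighted sum of the inputs for each first-layer node
node_0_0_input = (input_data * weights['node_0_0']).sum()  # 3*2 + 5*4 = 26
node_0_1_input = (input_data * weights['node_0_1']).sum()  # 3*4 + 5*(-5) = -13

# ReLU passes positive sums through and replaces negative sums with 0
node_0_0_output = relu(node_0_0_input)  # 26
node_0_1_output = relu(node_0_1_input)  # 0
print(node_0_0_output, node_0_1_output)
```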

This exercise is part of the course Introduction to Deep Learning in Python.

Exercise instructions

- Calculate node_0_0_input using its weights weights['node_0_0'] and the given input_data. Then apply the relu() function to get node_0_0_output.
- Do the same as above for node_0_1_input to get node_0_1_output.
- Calculate node_1_0_input using its weights weights['node_1_0'] and the outputs from the first hidden layer, hidden_0_outputs. Then apply the relu() function to get node_1_0_output.
- Do the same as above for node_1_1_input to get node_1_1_output.
- Calculate model_output using its weights weights['output'] and the outputs from the second hidden layer, the hidden_1_outputs array. Do not apply the relu() function to this output.
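The steps above can be sketched end to end as follows. Unlike the exercise, which reads the pre-loaded weights as a global, this version passes weights in as a parameter so it runs on its own. The relu() definition and all numeric values are hypothetical, chosen only for illustration.

```python
import numpy as np

def relu(x):
    """Rectified linear unit (assumed definition): max(0, x)."""
    return max(0, x)

def predict_with_network(input_data, weights):
    # First hidden layer: weighted sums of the raw inputs, then ReLU
    node_0_0_output = relu((input_data * weights['node_0_0']).sum())
    node_0_1_output = relu((input_data * weights['node_0_1']).sum())
    hidden_0_outputs = np.array([node_0_0_output, node_0_1_output])

    # Second hidden layer: weighted sums of the first layer's outputs, then ReLU
    node_1_0_output = relu((hidden_0_outputs * weights['node_1_0']).sum())
    node_1_1_output = relu((hidden_0_outputs * weights['node_1_1']).sum())
    hidden_1_outputs = np.array([node_1_0_output, node_1_1_output])

    # Output node: weighted sum only, no ReLU
    return (hidden_1_outputs * weights['output']).sum()

# Hypothetical example values, assumed for illustration only
input_data = np.array([3, 5])
weights = {
    'node_0_0': np.array([2, 4]),
    'node_0_1': np.array([4, -5]),
    'node_1_0': np.array([-1, 2]),
    'node_1_1': np.array([1, 2]),
    'output': np.array([2, 7]),
}

output = predict_with_network(input_data, weights)
print(output)  # 182
```

With these values the first hidden layer produces [26, 0], the second produces [0, 26], and the output node computes 0*2 + 26*7 = 182.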


Have a go at this exercise by completing this sample code.

```python
import numpy as np

# weights, input_data, and relu() are provided by the exercise environment

def predict_with_network(input_data):
    # Calculate node 0 in the first hidden layer
    node_0_0_input = (____ * ____).sum()
    node_0_0_output = relu(____)

    # Calculate node 1 in the first hidden layer
    node_0_1_input = ____
    node_0_1_output = ____

    # Put node values into array: hidden_0_outputs
    hidden_0_outputs = np.array([node_0_0_output, node_0_1_output])

    # Calculate node 0 in the second hidden layer
    node_1_0_input = ____
    node_1_0_output = ____

    # Calculate node 1 in the second hidden layer
    node_1_1_input = ____
    node_1_1_output = ____

    # Put node values into array: hidden_1_outputs
    hidden_1_outputs = np.array([node_1_0_output, node_1_1_output])

    # Calculate model output (no ReLU here): model_output
    model_output = ____

    # Return model_output
    return model_output

output = predict_with_network(input_data)
print(output)
```
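Because every node in a layer applies the same operation, the per-node sums can also be written as one matrix-vector product per layer: stack each layer's node weights as the rows of a matrix, multiply by the previous layer's outputs, and apply ReLU element-wise. The sketch below does this with NumPy. The layer matrices W0 and W1, the element-wise relu() helper, and the example numbers are illustrative assumptions rather than part of the exercise.

```python
import numpy as np

def relu(x):
    """Element-wise ReLU for NumPy arrays (assumed helper)."""
    return np.maximum(0, x)

# Hypothetical example values, assumed for illustration only
input_data = np.array([3, 5])
weights = {
    'node_0_0': np.array([2, 4]),
    'node_0_1': np.array([4, -5]),
    'node_1_0': np.array([-1, 2]),
    'node_1_1': np.array([1, 2]),
    'output': np.array([2, 7]),
}

# Stack each hidden layer's node weights as rows: shape (nodes, inputs)
W0 = np.vstack([weights['node_0_0'], weights['node_0_1']])
W1 = np.vstack([weights['node_1_0'], weights['node_1_1']])

hidden_0_outputs = relu(W0 @ input_data)             # [26, 0]
hidden_1_outputs = relu(W1 @ hidden_0_outputs)       # [0, 26]
model_output = weights['output'] @ hidden_1_outputs  # no ReLU on the output
print(model_output)  # 182
```

This gives the same result as the node-by-node version but needs no extra lines when a layer has more nodes; only the shape of each weight matrix changes.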