Implementing the SARSA update rule

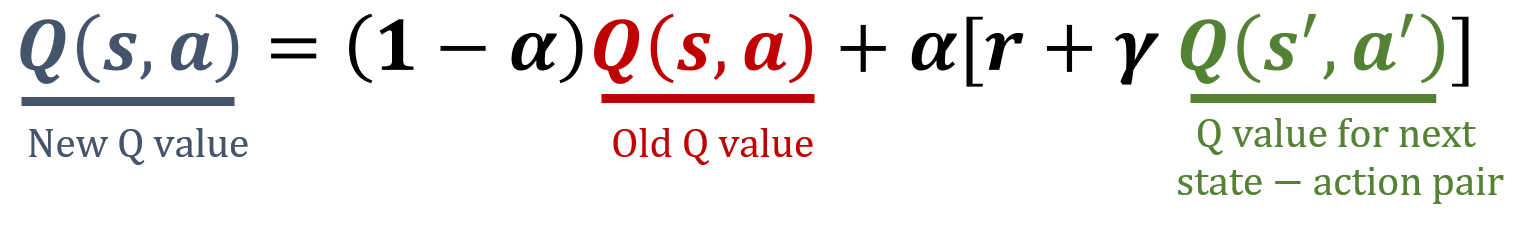

SARSA is an on-policy reinforcement learning (RL) algorithm that updates the action-value function using both the action taken in the current state and the action actually selected in the next state. Because the update uses the next action chosen by the policy being followed, the learned Q-values reflect the value of the current state-action pair under that policy's future behavior. The SARSA update rule is below, and your task is to implement a function that updates a Q-table based on this rule.
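
For reference, the standard textbook form of the SARSA update can be written as follows. The notation is an assumption made for this note and may differ from the course's own figure: $\alpha$ is the learning rate, $\gamma$ the discount factor, $r$ the reward, and $(s', a')$ the next state-action pair.

$$
Q(s, a) \leftarrow Q(s, a) + \alpha \left[ r + \gamma \, Q(s', a') - Q(s, a) \right]
$$

Expanding the bracket gives an equivalent weighted-average form, which maps directly onto a one-line assignment in code:

$$
Q(s, a) \leftarrow (1 - \alpha) \, Q(s, a) + \alpha \left( r + \gamma \, Q(s', a') \right)
$$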

The NumPy library has been imported for you as np.

This exercise is part of the course

Reinforcement Learning with Gymnasium in Python

Exercise instructions

- Retrieve the current Q-value for the given state-action pair.

- Find the Q-value for the next state-action pair.

- Update the Q-value for the current state-action pair using the SARSA formula.

- Update the Q-table `Q`, given that an agent takes action `0` in state `0`, receives a reward of `5`, moves to state `1`, and performs action `1`.

Hands-on interactive exercise

Have a go at this exercise by completing this sample code.

    def update_q_table(state, action, reward, next_state, next_action):
        # Get the old value of the current state-action pair
        old_value = ____
        # Get the value of the next state-action pair
        next_value = ____
        # Compute the new value of the current state-action pair
        Q[(state, action)] = ____

    alpha = 0.1  # learning rate
    gamma = 0.8  # discount factor
    Q = np.array([[10, 0], [0, 20]], dtype='float32')

    # Update the Q-table for the (state 0, action 0) pair
    ____

    print(Q)
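
One possible completion of the scaffold above is sketched here. It assumes the weighted-average form of the standard SARSA update, `(1 - alpha) * old + alpha * (reward + gamma * next)`, which may be written differently in the course's own solution; the values of `alpha`, `gamma`, and `Q` are taken from the exercise.

```python
import numpy as np

alpha = 0.1  # learning rate (value from the exercise)
gamma = 0.8  # discount factor (value from the exercise)
Q = np.array([[10, 0], [0, 20]], dtype='float32')

def update_q_table(state, action, reward, next_state, next_action):
    # Get the old value of the current state-action pair
    old_value = Q[state, action]
    # Get the value of the next state-action pair
    next_value = Q[next_state, next_action]
    # Blend the old value with the SARSA target: reward + gamma * next_value
    Q[state, action] = (1 - alpha) * old_value + alpha * (reward + gamma * next_value)

# Agent takes action 0 in state 0, receives reward 5,
# moves to state 1, and performs action 1
update_q_table(0, 0, 5, 1, 1)

print(Q)
```

Working through the numbers: the old value is 10 and the next value is 20, so the new entry is 0.9 × 10 + 0.1 × (5 + 0.8 × 20) = 9 + 2.1 = 11.1 (up to float32 rounding). The other three entries of `Q` are unchanged.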