Computing Q-values

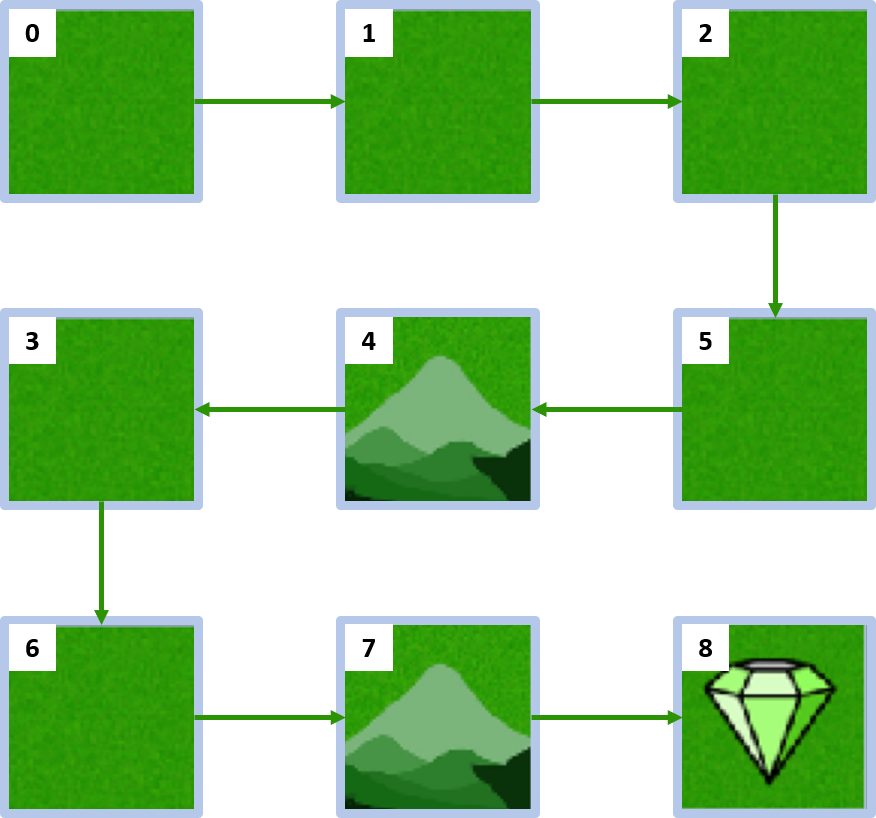

Your goal is to compute the action-values, also known as Q-values, for each state-action pair in the custom MyGridWorld environment when following the given policy. In RL, Q-values are essential because they represent the expected return from taking a specific action in a given state and following the policy thereafter.
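In symbols, this definition can be sketched as follows. The notation is the standard textbook one and is an assumption here, since the exercise text does not spell it out: p is the transition probability, r the reward, γ the discount factor (the gamma variable), and V_π the state-value function under the policy.

```latex
Q_\pi(s, a) = \sum_{s'} p(s' \mid s, a)\,\bigl[\, r(s, a, s') + \gamma\, V_\pi(s') \,\bigr]

% Deterministic case: a single next state s' reached with probability 1
Q_\pi(s, a) = r(s, a, s') + \gamma\, V_\pi(s')
```

The second line is the form that applies when each action leads to exactly one next state, so the sum reduces to a single term.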

The environment has been imported as env, along with the compute_state_value() function and the required variables (terminal_state, num_states, num_actions, policy, gamma).

This exercise is part of the course

Reinforcement Learning with Gymnasium in Python

Exercise instructions

- Complete the compute_q_value() function to compute the action-value for a given state and action.
- Create a dictionary Q where each key represents a state-action pair, and the corresponding value is the Q-value for that pair.

Hands-on interactive exercise

Have a go at this exercise by completing this sample code.

# Complete the function to compute the action-value for a state-action pair
def compute_q_value(state, action):
    if state == terminal_state:
        return None
    probability, next_state, reward, done = ____
    return ____

# Compute Q-values for each state-action pair
Q = {(____, ____): _____ for ____ in range(____) for ____ in range(____)}

print(Q)
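To see the idea end to end without access to MyGridWorld, here is a minimal, self-contained sketch on a hypothetical 3-state corridor. Everything in it is an assumption made for illustration: the transition table P (laid out like the tables in Gymnasium's toy-text environments, `P[state][action] = [(probability, next_state, reward, done)]`), the rewards, the policy, the value of gamma, and the stand-in compute_state_value(). Whether MyGridWorld exposes its transitions in the same way is not confirmed by the exercise text.

```python
# Hypothetical 3-state corridor standing in for MyGridWorld: states 0, 1, 2.
# State 2 is terminal. All numbers below are illustrative assumptions.
LEFT, RIGHT = 0, 1
num_states, num_actions = 3, 2
terminal_state = 2
gamma = 0.5

# Deterministic transitions: P[state][action] = [(probability, next_state, reward, done)]
P = {
    0: {LEFT: [(1.0, 0, -1, False)], RIGHT: [(1.0, 1, -1, False)]},
    1: {LEFT: [(1.0, 0, -1, False)], RIGHT: [(1.0, 2, 10, True)]},
    2: {LEFT: [(1.0, 2, 0, True)], RIGHT: [(1.0, 2, 0, True)]},
}

# Assumed deterministic policy: always move right
policy = {0: RIGHT, 1: RIGHT}


def compute_state_value(state):
    """Stand-in for the provided helper: value of a state under the policy."""
    if state == terminal_state:
        return 0
    action = policy[state]
    _, next_state, reward, _ = P[state][action][0]
    return reward + gamma * compute_state_value(next_state)


def compute_q_value(state, action):
    """Immediate reward for the action plus the discounted value of the next state."""
    if state == terminal_state:
        return None
    _, next_state, reward, _ = P[state][action][0]
    return reward + gamma * compute_state_value(next_state)


# One entry per (state, action) pair
Q = {
    (state, action): compute_q_value(state, action)
    for state in range(num_states)
    for action in range(num_actions)
}
print(Q)
```

The only difference between the two functions is where the first action comes from: compute_state_value() takes it from the policy, while compute_q_value() takes it as an argument and only then hands control back to the policy. In this sketch that gives Q[(1, RIGHT)] a value of 10.0, since the terminal state contributes nothing further.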