Applying Expected SARSA

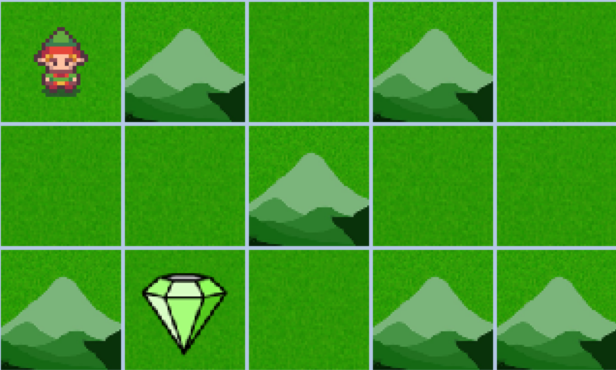

Now you'll apply the Expected SARSA algorithm in a custom environment as shown below, where the goal is to let an agent navigate through a grid, aiming to reach a goal as quickly as possible. The same rules we had before apply: the agent receives a reward of +10 when reaching the diamond, -2 when passing through a mountain, and -1 for every other state.

The environment has been imported as env.

This exercise is part of the course

Reinforcement Learning with Gymnasium in Python

Exercise instructions

- Initialize the Q-table

Qwith zeros for each state-action pair. - Update the Q-table using the

update_q_table()function. - Extract the policy as a dictionary from the learned Q-table.

Hands-on interactive exercise

Have a go at this exercise by completing this sample code.

# Initialize the Q-table with random values

Q = ____

for i_episode in range(num_episodes):

state, info = env.reset()

done = False

while not done:

action = env.action_space.sample()

next_state, reward, done, truncated, info = env.step(action)

# Update the Q-table

____

state = next_state

# Derive policy from Q-table

policy = {state: ____ for state in range(____)}

render_policy(policy)