Principal component analysis

In the last two chapters, you saw several ways to reduce the dimensionality of a dataset, including regularization and feature selection. Being able to explain the different approaches to dimensionality reduction is important in a machine learning interview. Large datasets take a long time to process, and noise in your data can bias your results.

One way of reducing dimensionality is principal component analysis (PCA). It reduces the size of the data by creating new features, the principal components, that preserve as much of the variance in the dataset as possible. Because the components are uncorrelated with one another, this also removes multicollinearity. In this exercise, you will use the sklearn.decomposition module to perform PCA on the features of the diabetes dataset, keeping the target variable progression separate.
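To make the two claims above concrete (most of the variance is preserved, and multicollinearity disappears), here is a minimal sketch on synthetic data. The data, the seed, and the choice of two components are assumptions made purely for illustration; they are not part of the exercise.

```python
import numpy as np
from sklearn.decomposition import PCA

# Synthetic data (an assumption for illustration): the third feature is
# almost exactly the sum of the first two, so the features are multicollinear.
rng = np.random.default_rng(0)
n_samples = 500
a = rng.normal(size=n_samples)
b = rng.normal(size=n_samples)
c = a + b + rng.normal(scale=0.05, size=n_samples)
X = np.column_stack([a, b, c])

# Correlation between the first and third original features
original_corr = np.corrcoef(X, rowvar=False)[0, 2]

# Project the three correlated features onto two principal components
pca = PCA(n_components=2)
X_reduced = pca.fit_transform(X)

# Share of the total variance retained by the two components
explained = pca.explained_variance_ratio_.sum()

# Correlation between the two new features (the principal components)
component_corr = np.corrcoef(X_reduced, rowvar=False)[0, 1]

print("original feature correlation:", original_corr)
print("variance retained:", explained)
print("component correlation:", component_corr)
```

In this sketch the two components should retain nearly all of the variance, and their mutual correlation should be zero up to floating-point error, even though the original features are strongly correlated.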

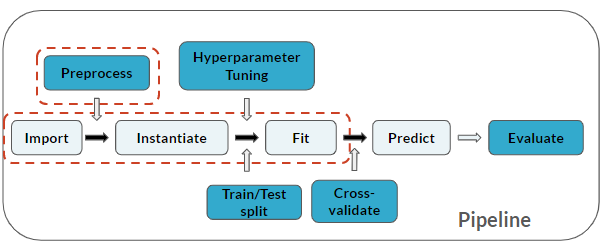

This is where you are in the pipeline:

This exercise is part of the course Practicing Machine Learning Interview Questions in Python.

Have a go at this exercise by completing this sample code.

```python
# Import module
from ____.____ import ____

# Feature matrix and target array
X = ____.____('____', axis=1)
y = ____['____']
```
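One possible way to fill in the template above is sketched here. The course's own DataFrame is not shown in this excerpt, so the name diabetes is hypothetical, and the data is a stand-in built from scikit-learn's bundled diabetes dataset with its target column renamed to progression. The choice of three components is also arbitrary.

```python
# Import module
from sklearn.datasets import load_diabetes
from sklearn.decomposition import PCA

# Assumption: `diabetes` is a hypothetical stand-in for the course's DataFrame,
# built from scikit-learn's bundled dataset with the target column renamed.
diabetes = load_diabetes(as_frame=True).frame.rename(
    columns={"target": "progression"}
)

# Feature matrix and target array
X = diabetes.drop("progression", axis=1)
y = diabetes["progression"]

# Fit PCA on the features only; n_components=3 is an arbitrary choice here
pca = PCA(n_components=3)
X_pca = pca.fit_transform(X)

print(X.shape, y.shape, X_pca.shape)
```

Dropping the target before fitting keeps progression out of the components, so the reduced features can still be used to predict it without leakage.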