Feature selection through feature importance

In the last exercise, you practiced using filter and wrapper methods to select features, a topic that comes up both in machine learning practice and in machine learning interviews. In this exercise, you'll practice feature selection using the built-in feature importances of tree-based machine learning algorithms on the diabetes DataFrame.

Although there is only time and space to practice a few of them on DataCamp, the scikit-learn documentation covers several other ways to select features.

The feature matrix and target array are saved to your workspace as X and y, respectively.

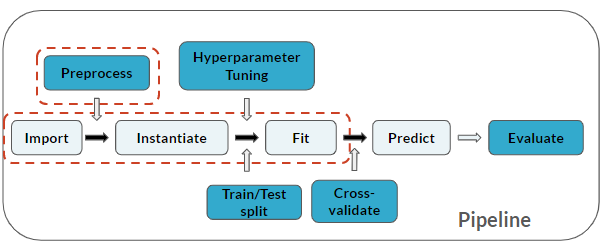

Recall that feature selection is considered a pre-processing step:
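To make the "pre-processing step" idea concrete, here is a minimal sketch that places feature selection before the final estimator in a scikit-learn Pipeline. Everything in it is an assumption for illustration and not part of the exercise: it uses scikit-learn's bundled diabetes dataset as a stand-in for the workspace data, a RandomForestRegressor inside SelectFromModel with a mean-importance threshold, and LinearRegression as the downstream model. The names `X_demo`, `y_demo`, `selector`, and `pipe` are hypothetical.

```python
from sklearn.datasets import load_diabetes
from sklearn.ensemble import RandomForestRegressor
from sklearn.feature_selection import SelectFromModel
from sklearn.linear_model import LinearRegression
from sklearn.pipeline import Pipeline

# Stand-in data (assumption): scikit-learn's bundled diabetes dataset,
# which may differ from the diabetes DataFrame in the exercise workspace.
data = load_diabetes()
X_demo, y_demo = data.data, data.target

# Selection step: keep features whose forest importance is at least the mean.
selector = SelectFromModel(
    RandomForestRegressor(max_depth=2, n_estimators=100, random_state=123),
    threshold="mean",
)

# Feature selection runs first, then the final model sees only the kept columns.
pipe = Pipeline([("select", selector), ("model", LinearRegression())])
pipe.fit(X_demo, y_demo)

# Boolean mask of the columns that survived the selection step.
mask = pipe.named_steps["select"].get_support()
kept = [name for name, keep in zip(data.feature_names, mask) if keep]
print(kept)
```

Putting the selector inside the pipeline means it is refit on the training portion only whenever the pipeline is cross-validated, which avoids leaking information from held-out data into the selection.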

This exercise is part of the course

Practicing Machine Learning Interview Questions in Python

Hands-on interactive exercise

Have a go at this exercise by completing this sample code.

# Import

from sklearn.ensemble import ____

# Instantiate

rf_mod = ____(max_depth=2, random_state=123,
              n_estimators=100, oob_score=True)

# Fit

rf_mod.____(____, ____)

# Print

print(diabetes.columns)

print(rf_mod.____)
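For reference, the same import, instantiate, fit, and print steps can be sketched end to end outside the exercise environment. This is a hedged illustration, not the exercise solution: it assumes a regression forest (RandomForestRegressor) and uses scikit-learn's bundled diabetes dataset in place of the workspace X and y, so the numbers it prints need not match the exercise. The names `X_demo`, `y_demo`, `rf_demo`, and `ranked` are hypothetical.

```python
from sklearn.datasets import load_diabetes
from sklearn.ensemble import RandomForestRegressor

# Stand-in data (assumption): the bundled scikit-learn diabetes dataset.
data = load_diabetes()
X_demo, y_demo = data.data, data.target

# Instantiate with the same hyperparameters as the sample code.
rf_demo = RandomForestRegressor(max_depth=2, random_state=123,
                                n_estimators=100, oob_score=True)

# Fit the forest on the feature matrix and target array.
rf_demo.fit(X_demo, y_demo)

# Impurity-based importances: one value per feature, normalized to sum to 1.
importances = rf_demo.feature_importances_

# Pair each importance with its column name and sort from most to least important.
ranked = sorted(zip(data.feature_names, importances),
                key=lambda pair: pair[1], reverse=True)
for name, score in ranked:
    print(f"{name:>4}: {score:.3f}")

# Out-of-bag R^2, available because oob_score=True was set.
print("OOB R^2:", rf_demo.oob_score_)
```

Pairing names with importances avoids having to line up two separately printed arrays by eye. The names must come from the columns of the feature matrix only, since the importances array has no entry for the target.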