Decision tree

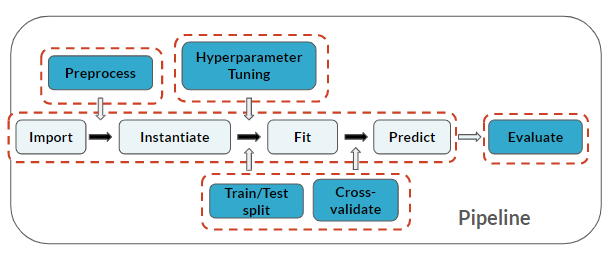

In the last three chapters, you've learned a range of techniques that help you tackle many aspects of the machine learning interview. In this chapter, you'll be introduced to various ways to make sure any model you're asked to create or discuss in a machine learning interview is generalizable, evaluated correctly, and properly selected from among other possible models.

In this exercise, you will delve into hyperparameter tuning for a decision tree on the loan_data dataset.

Here you'll tune min_samples_split, which is the minimum number of samples a node must contain before it can be split again, and max_depth, which is the maximum depth the tree is allowed to grow to. The deeper a tree, the more splits it makes and the more information about the training data it captures, at the cost of a higher risk of overfitting.
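As a rough illustration of tuning these two hyperparameters, the sketch below runs a small grid search. It makes several assumptions that are not part of the exercise: loan_data is not available here, so a synthetic dataset from make_classification stands in for X and y, and the candidate values in param_grid are hypothetical choices picked only for demonstration.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.tree import DecisionTreeClassifier

# Assumption: synthetic stand-in for the loan_data feature matrix X and target y
X, y = make_classification(
    n_samples=500, n_features=10, n_informative=5, random_state=123
)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.30, random_state=123
)

# Hypothetical candidate values for the two hyperparameters being tuned
param_grid = {
    "max_depth": [2, 4, 8],
    "min_samples_split": [2, 10, 50],
}

# 5-fold cross-validated search over every combination in the grid
search = GridSearchCV(
    DecisionTreeClassifier(random_state=123),
    param_grid,
    cv=5,
    scoring="accuracy",
)
search.fit(X_train, y_train)

print("Best parameters:", search.best_params_)
print("Test accuracy: {:.3f}".format(search.score(X_test, y_test)))
```

Cross-validating on the training split and scoring once on the held-out test split keeps the test data out of the tuning loop, which is one common way to get a more honest estimate of how the tuned tree generalizes.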

The feature matrix X and the target label y have been imported for you.

Note that you're once again performing all of the steps in the machine learning pipeline!

This exercise is part of the course

Practicing Machine Learning Interview Questions in Python

Hands-on interactive exercise

Have a go at this exercise by completing this sample code.

# Import modules
from sklearn.tree import ____
from sklearn.metrics import accuracy_score
# train_test_split is used below, so it must be imported as well
from sklearn.model_selection import train_test_split

# Train/test split
X_train, X_test, y_train, y_test = train_test_split(____, ____, test_size=0.30, random_state=123)

# Instantiate, Fit, Predict
loans_clf = ____()
loans_clf.____(____, ____)
y_pred = loans_clf.____(____)

# Evaluation metric
print("Decision Tree Accuracy: {}".format(____(____, ____)))
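For reference, here is one possible way the split, fit, predict, and evaluate steps could look end to end. This is a sketch under stated assumptions, not the exercise's official solution: the data is a synthetic stand-in generated with make_classification rather than loan_data, and random_state is passed to the classifier only to make the run repeatable.

```python
from sklearn.datasets import make_classification
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Assumption: synthetic stand-in for the loan_data feature matrix X and target y
X, y = make_classification(n_samples=500, n_features=10, random_state=123)

# Train/test split: hold out 30% of the rows for evaluation
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.30, random_state=123
)

# Instantiate, fit, predict (default hyperparameters, so the tree grows unrestricted)
loans_clf = DecisionTreeClassifier(random_state=123)
loans_clf.fit(X_train, y_train)
y_pred = loans_clf.predict(X_test)

# Evaluation metric: fraction of test rows whose label was predicted correctly
print("Decision Tree Accuracy: {}".format(accuracy_score(y_test, y_pred)))
```

With default settings the tree is free to grow until its leaves are pure, so this accuracy serves as an untuned baseline to compare against after max_depth and min_samples_split are adjusted.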