Aggregation and filtering

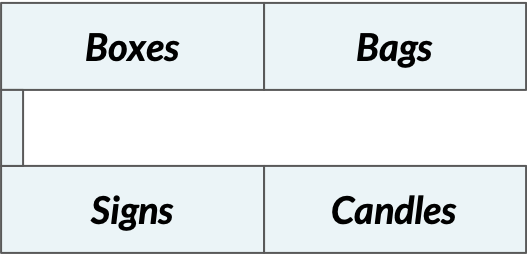

In the video, we helped a gift store manager arrange the sections in her physical retail location according to association rules. The layout of the store forced us to group sections into two pairs of product types. After applying advanced filtering techniques, we proposed the floor layout below.

The store manager is now asking you to generate another floorplan proposal, but with a different criterion: each pair of sections should contain one high-support product and one low-support product. The data has been aggregated and one-hot encoded for you and is available as aggregated. Additionally, apriori() and association_rules() have been imported from mlxtend.

This exercise is part of the course

Market Basket Analysis in Python

Exercise instructions

- Generate the set of frequent itemsets with a minimum support threshold of 0.0001.

- Identify all rules with a minimum support threshold of 0.0001.

- Select all rules with an antecedent support greater than 0.35.

- Select all rules with a consequent support lower than 0.35.
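To make the two support filters concrete, here is a minimal sketch in plain Python that does not use mlxtend. The transactions, the item names, and the helper support() are hypothetical and chosen only for illustration. The sketch builds single-item rules by hand, then keeps those whose antecedent support is above 0.35 and whose consequent support is below 0.35.

```python
from itertools import permutations

# Hypothetical one-hot encoded transactions (not the course data).
transactions = [
    {"bag": True, "candle": True, "sign": False},
    {"bag": True, "candle": False, "sign": True},
    {"bag": True, "candle": False, "sign": False},
    {"bag": False, "candle": True, "sign": False},
    {"bag": True, "candle": False, "sign": False},
]


def support(items):
    """Share of transactions that contain every item in `items`."""
    hits = sum(all(t[item] for item in items) for t in transactions)
    return hits / len(transactions)


# Enumerate single-item rules "antecedent -> consequent" and keep those
# whose joint support reaches the minimum threshold of 0.0001.
item_names = list(transactions[0])
rules = []
for antecedent, consequent in permutations(item_names, 2):
    if support([antecedent, consequent]) >= 0.0001:
        rules.append({
            "antecedent": antecedent,
            "consequent": consequent,
            "antecedent support": support([antecedent]),
            "consequent support": support([consequent]),
        })

# Pair a high-support antecedent with a low-support consequent.
selected = [
    (r["antecedent"], r["consequent"])
    for r in rules
    if r["antecedent support"] > 0.35 and r["consequent support"] < 0.35
]
print(selected)
```

In this toy data only one rule survives both filters: the frequent item appears as the antecedent and the rare item as the consequent, which is the pairing the store manager asked for.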

Hands-on interactive exercise

Have a go at this exercise by completing this sample code.

# Apply the apriori algorithm with a minimum support of 0.0001

frequent_itemsets = apriori(aggregated, ____, use_colnames = True)

# Generate the initial set of rules using a minimum support of 0.0001

rules = association_rules(frequent_itemsets,
                          metric = "____", min_threshold = ____)

# Set minimum antecedent support to 0.35

rules = rules[____['antecedent support'] > ____]

# Set maximum consequent support to 0.35

rules = rules[____ < 0.35]

# Print the remaining rules

print(rules)
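The two filtering steps rely on pandas boolean indexing. The sketch below shows that pattern on a small hand-built table, assuming only that the rules DataFrame has 'antecedent support' and 'consequent support' columns as in the template above. The rows and values are invented, and plain strings stand in for the item sets that association_rules() would return.

```python
import pandas as pd

# Hypothetical rules table; values are invented for illustration only.
toy_rules = pd.DataFrame({
    "antecedents": ["mug", "card", "mug", "ribbon"],
    "consequents": ["card", "mug", "ribbon", "mug"],
    "antecedent support": [0.60, 0.40, 0.60, 0.10],
    "consequent support": [0.40, 0.60, 0.10, 0.60],
})

# Each comparison yields a boolean Series aligned with the rows.
high_antecedent = toy_rules["antecedent support"] > 0.35
low_consequent = toy_rules["consequent support"] < 0.35

# Combining the masks with & gives the same rows as applying
# the two filters one after the other.
selected_rules = toy_rules[high_antecedent & low_consequent]
print(selected_rules)
```

Applying the filters sequentially, as the exercise does, makes each step easy to inspect. Combining the masks is an equivalent alternative when the intermediate result is not needed.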