Calculating child entropies

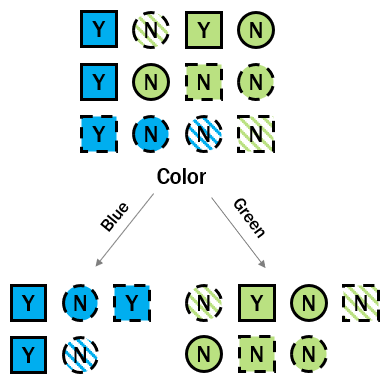

You have completed the first step in measuring the information gain that color provides: you computed the disorder (entropy) of the root node. Now you need to measure the entropy of the child nodes so that you can assess whether their collective disorder is less than the disorder of the parent node. If it is, then color has given you some information about loan default status.
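
The comparison described above can be written out as formulas. This is a sketch, assuming base-2 logarithms (entropy measured in bits) and two classes; the symbols $p_{\text{yes}}$, $p_{\text{no}}$, $n_{\text{left}}$, $n_{\text{right}}$, and $n$ are introduced here for illustration and do not appear in the exercise itself.

$$
H(\text{node}) = -\,p_{\text{yes}} \log_2 p_{\text{yes}} \;-\; p_{\text{no}} \log_2 p_{\text{no}}
$$

$$
H_{\text{children}} = \frac{n_{\text{left}}}{n}\, H(\text{left}) + \frac{n_{\text{right}}}{n}\, H(\text{right}),
\qquad
\text{gain} = H(\text{root}) - H_{\text{children}}
$$

Here $n = n_{\text{left}} + n_{\text{right}}$ is the number of observations in the parent node. A positive gain means the split on color reduced the disorder.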

This exercise is part of the course

Dimensionality Reduction in R

Exercise instructions

- Calculate the class probabilities for the left split (blue side).

- Calculate the entropy of the left split using the class probabilities.

- Calculate the class probabilities for the right split (green side).

- Calculate the entropy of the right split using the class probabilities.
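
The steps above follow the same pattern for each child node. The sketch below works through one node using made-up counts (3 defaults and 9 non-defaults are hypothetical, not the values from the exercise image) and assumes base-2 logarithms via `log2()`. The variable names `n_yes`, `n_no`, and `entropy_node` are illustrative only.

```r
# Hypothetical counts for one child node (NOT the exercise data)
n_yes <- 3    # observations that defaulted
n_no  <- 9    # observations that did not default
n_total <- n_yes + n_no

# Class probabilities: class count divided by the node size
p_yes <- n_yes / n_total
p_no  <- n_no / n_total

# Entropy in bits: add up -p * log2(p) over both classes
entropy_node <- -(p_yes * log2(p_yes)) +
  -(p_no * log2(p_no))

print(round(entropy_node, 3))  # 0.811
```

One caveat with this direct formula: for a perfectly pure node, one probability is 0 and `0 * log2(0)` evaluates to `NaN` in R, so such a node would need to be handled separately (its entropy is conventionally taken as 0).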

Hands-on interactive exercise

Have a go at this exercise by completing this sample code.

    # Calculate the class probabilities in the left split
    p_left_yes <- ___
    p_left_no <- ___

    # Calculate the entropy of the left split
    entropy_left <- -(___ * ___(___)) +
      -(___ * ___(___))

    # Calculate the class probabilities in the right split
    p_right_yes <- ___
    p_right_no <- ___

    # Calculate the entropy of the right split
    entropy_right <- -(___ * ___(___)) +
      -(___ * ___(___))
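
Once both child entropies are known, they can be combined and compared with the root entropy, as the introduction describes. The following is a rough sketch of that comparison, not the course's solution: every number is a hypothetical placeholder, and the names `h_parent`, `h_left`, `h_right`, `n_left`, and `n_right` are made up for illustration.

```r
# Hypothetical values for illustration only (NOT the exercise answers)
h_parent <- 0.993   # entropy of the root node
h_left   <- 0.811   # entropy of the left child
h_right  <- 0.811   # entropy of the right child
n_left   <- 12      # observations in the left child
n_right  <- 8       # observations in the right child
n_total  <- n_left + n_right

# Weight each child's entropy by its share of the observations
h_children <- (n_left / n_total) * h_left +
  (n_right / n_total) * h_right

# Information gain: how much the split reduced the entropy
info_gain <- h_parent - h_children

print(round(info_gain, 3))  # 0.182
```

Weighting by node size keeps a small, very pure child from dominating the result; a gain above zero indicates that the children are collectively less disordered than the parent.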