Training the REINFORCE algorithm

You are ready to train your Lunar Lander using REINFORCE! All you need to do is implement the REINFORCE training loop, including the REINFORCE loss calculation.

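Because the loss calculation spans both the inner and outer loops, you will not use a calculate_loss() function this time. Instead, during the episode (the inner loop) you accumulate two quantities: the log probabilities of the selected actions and the discounted episode return.

When the episode is complete (back in the outer loop), you use both quantities to calculate the loss.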
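The arithmetic behind those two quantities can be sketched in plain Python. This sketch uses lists instead of PyTorch tensors so that it runs anywhere. The function name `reinforce_episode_loss` and the sample numbers are hypothetical. Like the sample code, it counts steps from 1 when discounting. In the exercise itself, the loss must remain a PyTorch tensor built from the log probabilities so that `loss.backward()` can compute gradients; this sketch only shows the values involved.

```python
import math

def reinforce_episode_loss(rewards, log_probs, gamma=0.99):
    """Return (R, loss) for one finished episode (hypothetical helper).

    rewards[i] and log_probs[i] belong to step i + 1, matching a step
    counter that is incremented before the step's reward is used.
    """
    if len(rewards) != len(log_probs):
        raise ValueError("need exactly one log probability per reward")
    # Discounted return: R = sum over steps of gamma**step * reward
    R = sum(gamma ** step * r for step, r in enumerate(rewards, start=1))
    # REINFORCE loss: minus the return times the summed log probabilities
    loss = -R * sum(log_probs)
    return R, loss

# A made-up three-step episode
rewards = [1.0, 0.0, 2.0]
log_probs = [math.log(0.5), math.log(0.25), math.log(0.5)]
R, loss = reinforce_episode_loss(rewards, log_probs, gamma=0.5)
print(R, loss)
```

Here R = 0.5·1 + 0.25·0 + 0.125·2 = 0.75, and the loss is positive because the summed log probabilities are negative.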

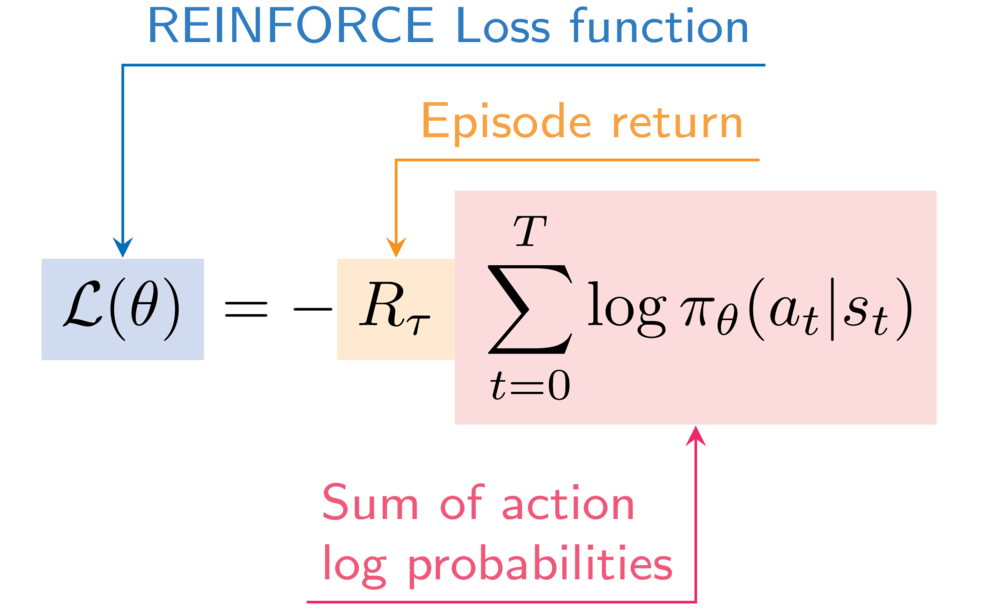

For reference, this is the expression for the REINFORCE loss function:
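In LaTeX, and assuming the image shows the standard REINFORCE form that the sample code implements, the loss can be written as shown below. Steps are counted from t = 1, γ is the discount factor, r_t is the reward at step t, and π_θ(a_t | s_t) is the probability the policy network assigns to the selected action:

$$
R_\tau = \sum_{t=1}^{T} \gamma^{t} r_t,
\qquad
L(\theta) = -R_\tau \sum_{t=1}^{T} \log \pi_\theta(a_t \mid s_t)
$$

Minimizing L(θ) by gradient descent changes the log probabilities of the actions taken in proportion to R_τ. They go up when the episode return is positive and down when it is negative.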

You will again use the describe_episode() function to print out how your agent is doing at each episode.
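The course provides describe_episode() for you. To run the loop outside the exercise environment, you could use a hypothetical stand-in that prints the four arguments the sample code passes. The output format below is an assumption, not the course's.

```python
def describe_episode(episode, reward, episode_reward, step):
    """Hypothetical stand-in for the course's describe_episode() helper.

    Prints and returns a one-line summary of a finished episode: its index,
    the reward of its final step, its undiscounted total reward, and its length.
    """
    summary = (
        f"Episode {episode} | final reward: {reward:.2f} | "
        f"episode reward: {episode_reward:.2f} | steps: {step}"
    )
    print(summary)
    return summary

describe_episode(3, -100.0, -215.4, 87)
```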

This exercise is part of the course Deep Reinforcement Learning in Python.

Exercise instructions

- Append the log probability of the selected action to the episode log probabilities.

- Increment the episode return with the discounted reward of the current step.

- Calculate the REINFORCE episode loss.

Have a go at this exercise by completing this sample code.

```python
import torch

# env, policy_network, select_action(), optimizer and describe_episode()
# are assumed to be defined by the exercise environment.
for episode in range(50):
    state, info = env.reset()
    done = False
    episode_reward = 0
    step = 0
    episode_log_probs = torch.tensor([])
    R = 0
    while not done:
        step += 1
        action, log_prob = select_action(policy_network, state)
        next_state, reward, terminated, truncated, _ = env.step(action)
        done = terminated or truncated
        episode_reward += reward
        # Append to the episode action log probabilities
        episode_log_probs = torch.cat((____, ____))
        # Increment the episode return
        R += (____ ** step) * ____
        state = next_state
    # Calculate the episode loss
    loss = ____ * ____.sum()
    optimizer.zero_grad()
    loss.backward()
    optimizer.step()
    describe_episode(episode, reward, episode_reward, step)
```
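To see what `loss.backward()` and `optimizer.step()` do with this loss, here is a minimal pure-Python sketch on a toy problem rather than Lunar Lander. The problem is a hypothetical three-armed bandit in which every episode is a single step and the policy is a softmax over three logits. For a softmax policy, the gradient of log π(a) with respect to logit k is 1[k = a] - π(k). The gradient of the REINFORCE loss -R log π(a) is therefore -R(1[k = a] - π(k)), and a plain gradient-descent step stands in for the optimizer. The arm rewards, learning rate, and episode count are made up for illustration.

```python
import math
import random

def softmax(logits):
    # Subtract the max before exponentiating for numerical stability
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def train_bandit(arm_rewards, episodes=2000, lr=0.1, seed=0):
    """REINFORCE on a one-step bandit with a softmax policy (toy example)."""
    rng = random.Random(seed)
    logits = [0.0] * len(arm_rewards)
    for _ in range(episodes):
        probs = softmax(logits)
        # Sample an action from the current policy
        action = rng.choices(range(len(probs)), weights=probs)[0]
        # One-step episode: the return is that step's reward
        # (discounting a single step would only rescale it)
        R = arm_rewards[action]
        # Gradient of loss = -R * log pi(action) with respect to each logit
        grads = [-R * ((1.0 if k == action else 0.0) - p) for k, p in enumerate(probs)]
        # Plain gradient-descent step, like optimizer.step() with torch.optim.SGD
        logits = [w - lr * g for w, g in zip(logits, grads)]
    return softmax(logits)

final_probs = train_bandit([0.2, 1.0, 0.5])
print([round(p, 3) for p in final_probs])
```

After training, most of the probability mass should sit on the middle arm, which has the highest reward. In expectation, each update raises the logit of every arm whose reward is above the policy's current average reward.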